Overcoming API Integration Challenges: Best Practices and Solutions

Best practices for successful API integration: Secure data with robust frameworks, seamless versioning, and more.

API integration plays a central role in modern software development by enabling applications to seamlessly communicate with each other and exchange data. APIs (Application Programming Interfaces) provide a standardized way for different software systems to interact and exchange information. By using APIs, developers can integrate external functions, services and data into their applications, saving time and effort.

The Importance of API Integration in Modern Software Development

API integration has become a fundamental aspect of modern software development for several reasons:

- Increased functionality: APIs allow developers to extend the capabilities of their applications by integrating with external services and systems. This integration enables the use of specific functions, such as payment gateways, social media sharing, mapping services, or weather data. API integration allows developers to focus on developing core functionality while leveraging existing services.

- Streamlined development: APIs provide pre-built functionality and resources so developers do not have to reinvent the wheel. They can use APIs to access complex services, databases, or algorithms without having to develop them from scratch. This streamlines the development process, shortens development time, and enables faster time-to-market for applications.

- Data exchange and collaboration: APIs facilitate the seamless exchange of data between different systems. They enable applications to share information, synchronize data, and collaborate with external platforms. This ability to integrate and share data plays a critical role in building connected ecosystems, enabling applications to collaborate, and delivering a consistent user experience across multiple platforms.

While API integration offers numerous benefits, it also presents challenges. Developers who understand these challenges and apply effective strategies can ensure smooth and successful API integration in their applications.

Understanding API Integration Challenges

Incompatible Data Formats and Protocols

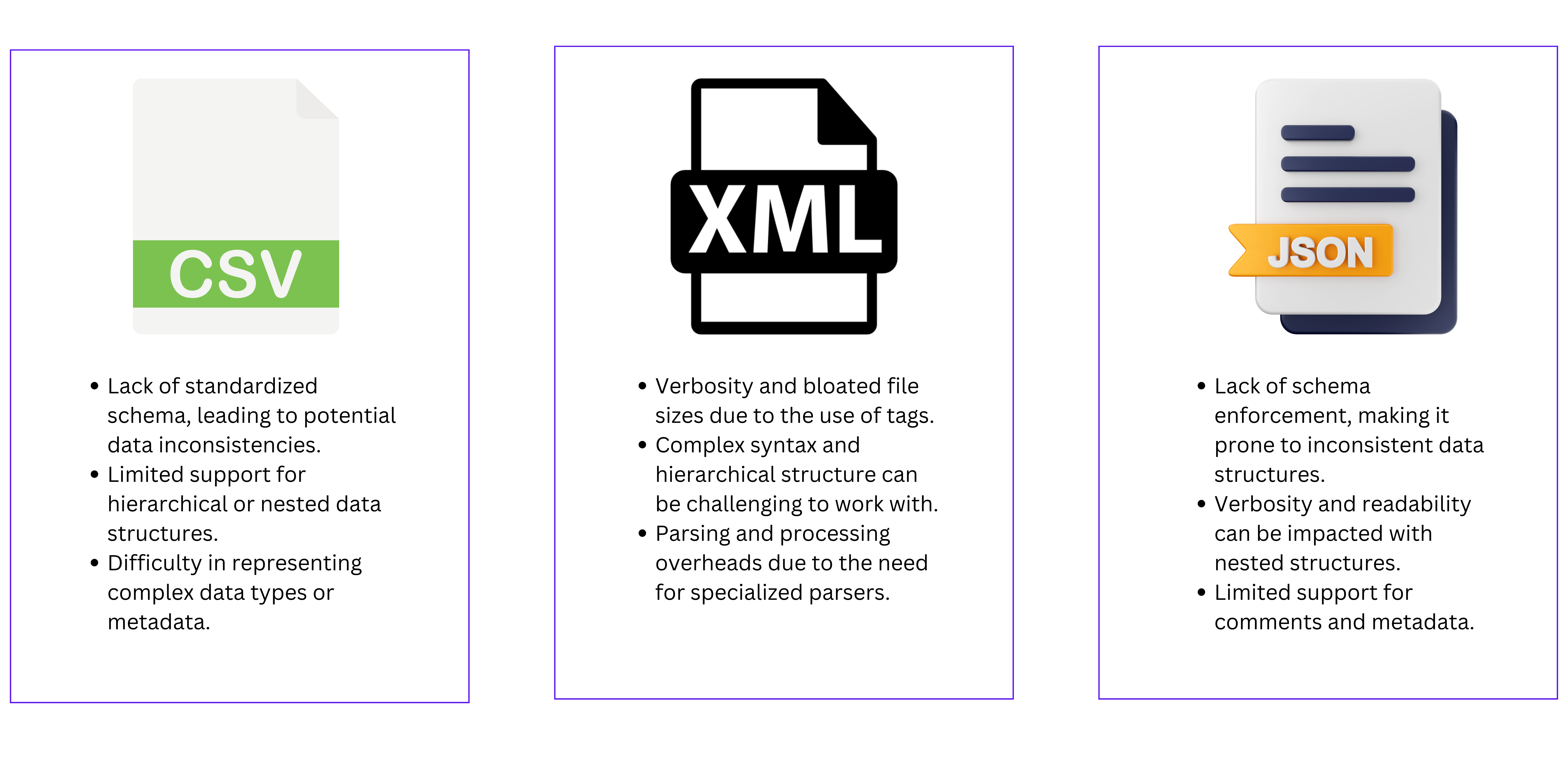

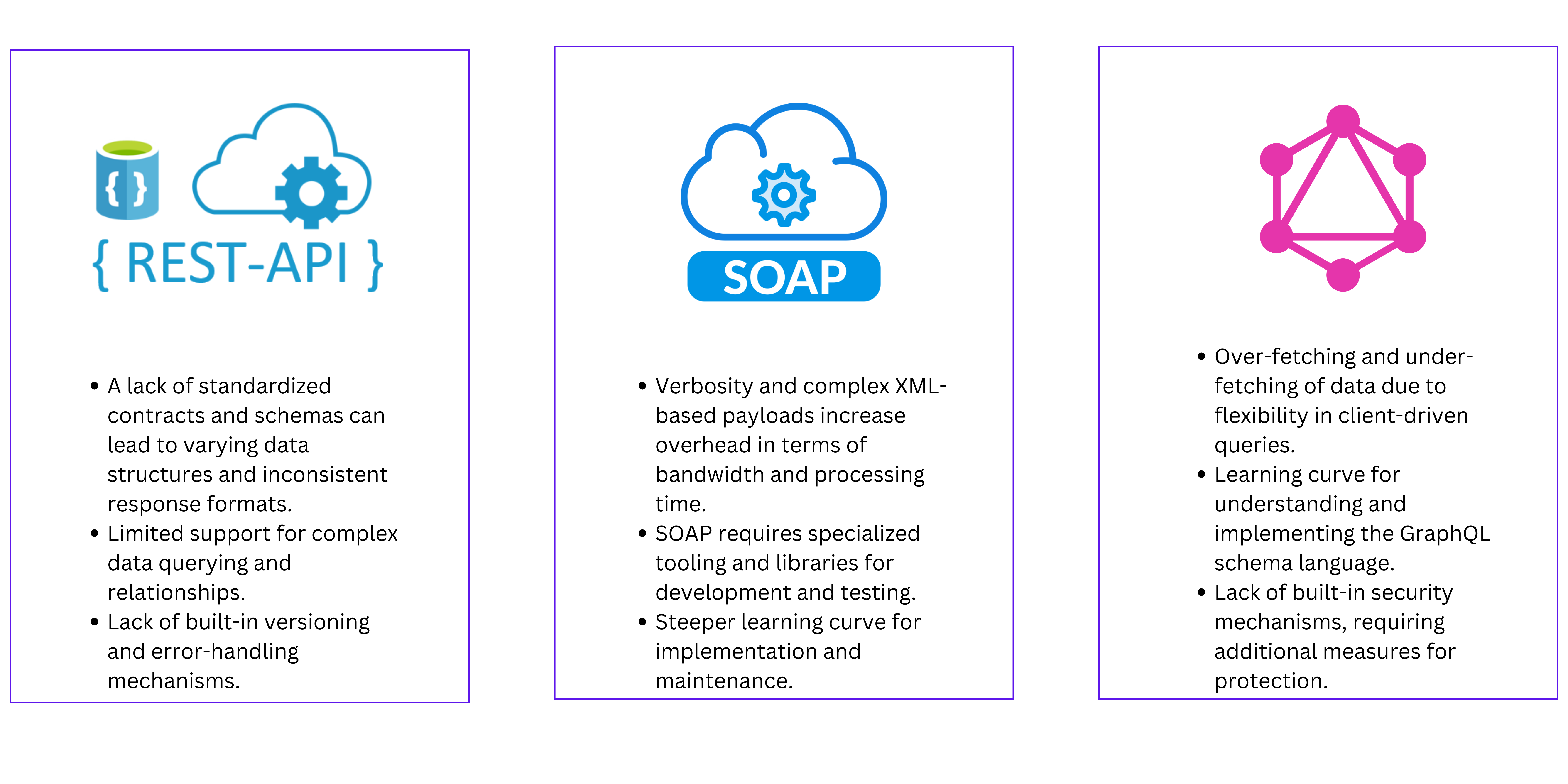

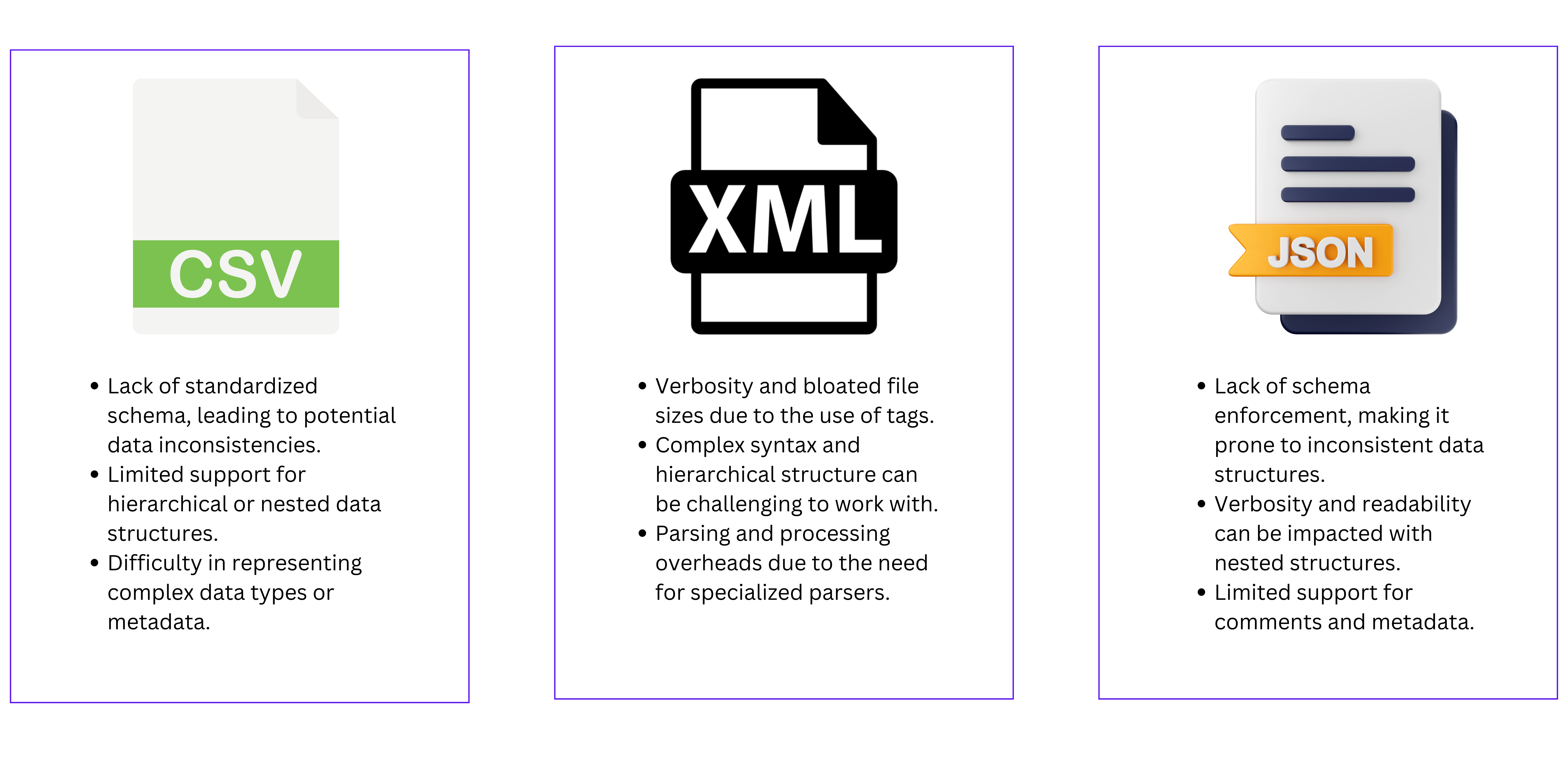

One of the biggest challenges with API integration is dealing with incompatible data formats. APIs often use different formats for data representation, such as JSON, XML, and CSV. JSON (JavaScript Object Notation) has become popular because of its simplicity and ease of use. It provides a lightweight and human-readable format for structured data, making it highly compatible with modern web applications. XML (eXtensible Markup Language), on the other hand, is a versatile markup language known for its hierarchical data representation. Although XML was widely used in the past, JSON has gradually replaced it in many web APIs because JSON is simpler and usually cheaper to parse. In addition, CSV (Comma-Separated Values) is a simple text format that is commonly used for data exchange, especially in scenarios where a hierarchical structure is not required.

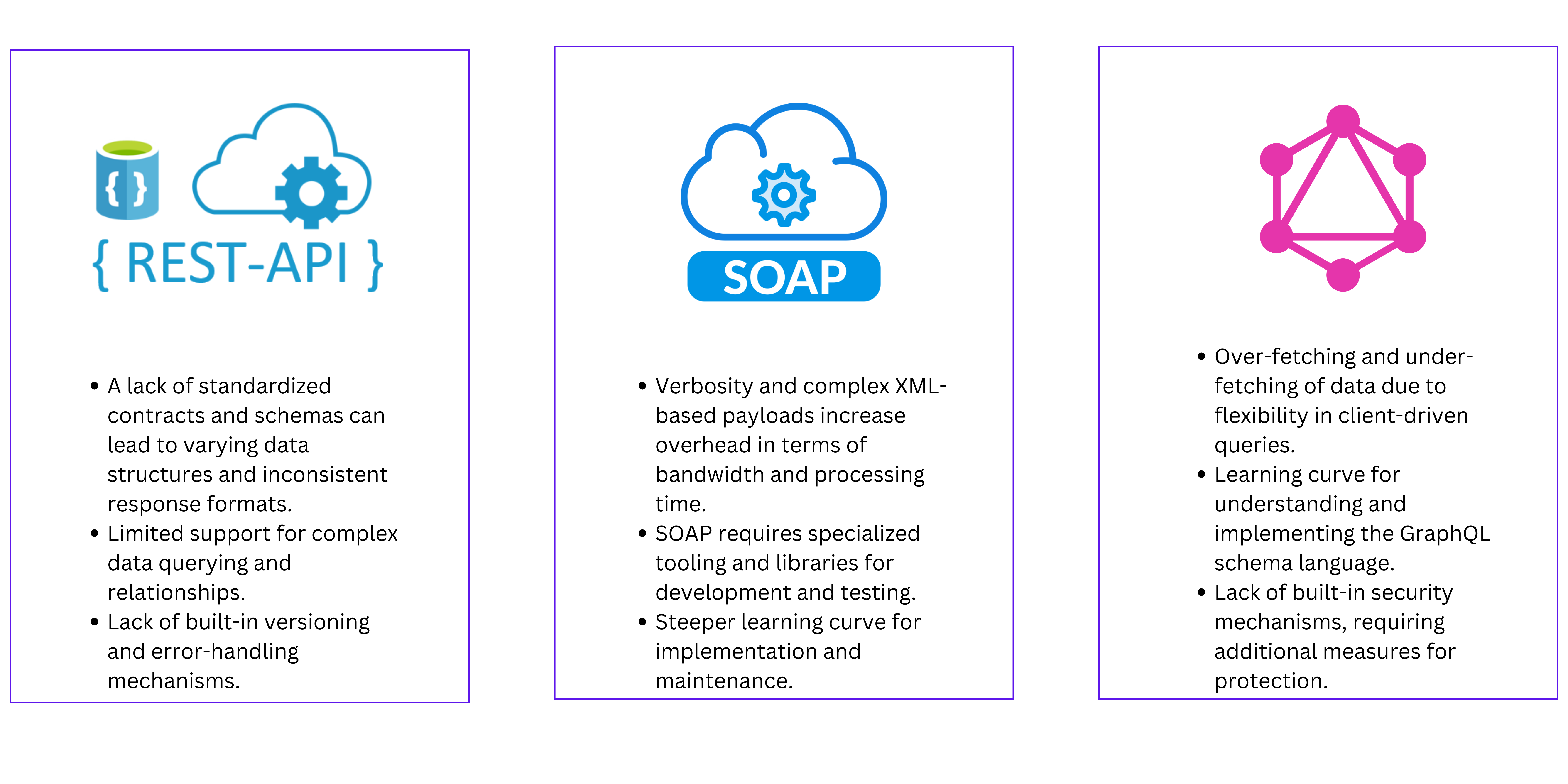

In addition to data format challenges, another major hurdle to API integration is the difference between API styles and communication protocols. APIs can use different approaches, each with its own characteristics and requirements. The most common ones that you'll probably be familiar with are REST (Representational State Transfer), SOAP (Simple Object Access Protocol), and GraphQL. REST, an architectural style built on the HTTP protocol, provides a simple and lightweight approach to building APIs. It emphasizes statelessness, scalability, and the use of standard HTTP methods such as GET, POST, PUT, and DELETE to interact with resources. SOAP, on the other hand, relies on XML for message exchange and often requires complex XML schemas and Web Services Description Language (WSDL) definitions. GraphQL, a relatively new alternative, is a query language for APIs that allows clients to request exactly the data they need in a flexible and efficient manner.

Solutions for Handling Data Format and Protocol Discrepancies

To overcome the challenges posed by incompatible data formats and protocols, experienced API experts have several solutions at their disposal. Data transformation is an important technique that allows you to convert data seamlessly between different formats. By using libraries and frameworks, you can effectively parse and serialize data in different formats, ensuring compatibility between the API and your application. Another useful approach is to use adapter patterns. By encapsulating the logic required to translate data formats or protocols, adapters act as intermediaries between your application and the API. They perform the necessary conversions, enabling seamless integration while maintaining the integrity of the underlying systems. In addition, middleware solutions and integration tools provide built-in support for handling various data formats and protocols. These tools provide pre-built connectors and converters that simplify the integration process and save valuable development time.
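
The data transformation and adapter ideas above can be sketched with Python's standard library. This is a minimal illustration, not a definitive implementation: the function names, the `PARSERS` registry, and the `<items><item>...</item></items>` XML layout are assumptions made for the example, and it only handles flat records.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET
from typing import Dict, List


def records_from_json(text: str) -> List[Dict[str, object]]:
    """Parse a JSON array of flat objects into a list of dicts."""
    return [dict(item) for item in json.loads(text)]


def records_from_xml(text: str) -> List[Dict[str, object]]:
    """Parse <items><item><field>value</field>...</item></items> into dicts."""
    root = ET.fromstring(text)
    return [{child.tag: child.text or "" for child in item} for item in root]


def records_to_csv(records: List[Dict[str, object]]) -> str:
    """Serialize flat records to CSV, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()),
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Adapter registry (hypothetical): the application names the source format
# and never touches the parsing details directly.
PARSERS = {"json": records_from_json, "xml": records_from_xml}


def to_csv(payload: str, source_format: str) -> str:
    """Convert a JSON or XML payload into CSV through a common record shape."""
    return records_to_csv(PARSERS[source_format](payload))
```

Converting every format to one internal record shape first means that supporting a new format requires only one new parser, not a converter for every pair of formats.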

By understanding these challenges and deploying appropriate solutions, you can overcome the complexity of incompatible data formats and protocols in API integration.

Authentication and Authorization Issues

Different Authentication Mechanisms (API Keys, OAuth, JWT)

Authentication mechanisms play a critical role in ensuring secure access and protection of sensitive data during API integration. Common authentication methods include API keys, OAuth, and JSON Web Tokens (JWT).

API keys are unique identifiers issued to applications or users for authentication. They act as credentials and are typically included as parameters or headers in API requests. API keys validate the identity of the requestor and grant access based on the specified key.

OAuth, an industry-standard authorization protocol, allows users to grant limited access to their resources without revealing their credentials. It involves obtaining access tokens and refreshing them periodically. OAuth's authorization flow separates the authentication process from access authorization, which increases security and allows users to control the amount of access granted.

A JSON Web Token (JWT) is a compact, self-contained token used for authentication and authorization. JWTs carry encoded claims about the user or application, such as identity, access permissions, and expiration time, and are signed to ensure integrity. Because the token itself carries this information, JWTs enable stateless authentication.

Challenges Related to Authentication and Authorization

Authentication and authorization can present certain challenges in API integration. Some common challenges are:

- Credential management: Proper storage and management of API keys, OAuth tokens, or JWT secrets is critical. It is very important to employ robust key management practices, secure storage mechanisms, and regular credential rotation to mitigate the risk of unauthorized access.

- Token management: Handling access tokens, monitoring their expiration, and refreshing them without interruption can be complex. You need to store and transmit tokens securely and implement efficient mechanisms for obtaining and refreshing them to ensure uninterrupted API access.

- Scope and granularity: APIs often require fine-grained control over the permissions and access rights granted to different users or applications. Balancing the need for flexibility with the complexity of managing granular permission rules can be challenging. You need to define and enforce access policies so that users or applications can access only the resources they need, while preventing unauthorized access.
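
The token management challenge above can be eased with a small cache that refreshes a token shortly before it expires. The sketch below assumes a hypothetical `fetch_token` callable that returns a token and its lifetime in seconds; how that callable talks to the authorization server is outside the scope of the example.

```python
import time
from typing import Callable, Optional, Tuple


class TokenCache:
    """Caches an access token and refreshes it shortly before it expires."""

    def __init__(self, fetch_token: Callable[[], Tuple[str, float]],
                 skew_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch_token = fetch_token  # hypothetical: returns (token, lifetime_seconds)
        self._skew = skew_seconds        # refresh this many seconds before expiry
        self._clock = clock              # injectable so tests can control time
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        """Return a valid token, fetching a new one only when needed."""
        now = self._clock()
        if self._token is None or now >= self._expires_at - self._skew:
            token, lifetime = self._fetch_token()
            self._token = token
            self._expires_at = now + lifetime
        return self._token
```

Refreshing slightly early (the `skew_seconds` margin) reduces the chance that a token expires while a request is already in flight.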

Best Practices for Secure Authentication and Authorization

It's critical that you adhere to best practices for secure authentication and authorization during API integration. Here are some key practices:

- Use secure channels: Always transmit sensitive authentication data over secure channels such as HTTPS to protect it from eavesdropping and tampering. Encrypting communications ensures the confidentiality and integrity of authentication requests and responses.

- Implement strong identity and access management: Deploy robust identity and access management (IAM) solutions that enforce strong authentication mechanisms such as multi-factor authentication (MFA). IAM frameworks provide comprehensive user management, centralized access control, and auditing capabilities.

- Leverage industry standards: Adhering to established standards such as OAuth and JWT promotes interoperability, ensures compatibility with multiple API providers, and lets you benefit from the collective experience of the developer community.

- Regularly review and renew credentials: Review and change API keys, tokens, or secrets regularly to minimize the impact of potential security breaches. Implementing automated credential rotation mechanisms, such as scheduled key rotation or token expiration policies, helps maintain the security of your API integration.

By following these best practices, you can increase the security of your API integration, protect sensitive data, and reduce the risks associated with authentication and authorization issues.

Versioning and Backward Compatibility

Challenges Related to API Versioning and Compatibility

Managing versioning and ensuring backward compatibility can be complex. As APIs evolve and new features are introduced, maintaining compatibility is critical to prevent existing integrations from being broken. Some challenges that arise in this context are:

- Compatibility with existing clients: Clients built against older versions of the API must continue to function seamlessly when API updates are introduced. New versions must not introduce breaking changes or unexpected behavior.

- Communication and documentation: Effectively communicating changes and updates to API consumers can be challenging. Providing clear release notes and documentation highlighting behavioral changes, deprecated features, or new requirements is critical for developers to update their integrations accordingly.

- Dependency management: APIs often rely on third-party libraries or services that may have their own versioning schemes. Coordinating version updates between different dependencies can get complicated, especially when multiple integrations are involved.

Strategies for Managing Versioning and Backward Compatibility

To overcome these challenges and effectively manage API versioning and backward compatibility, developers can use the following strategies:

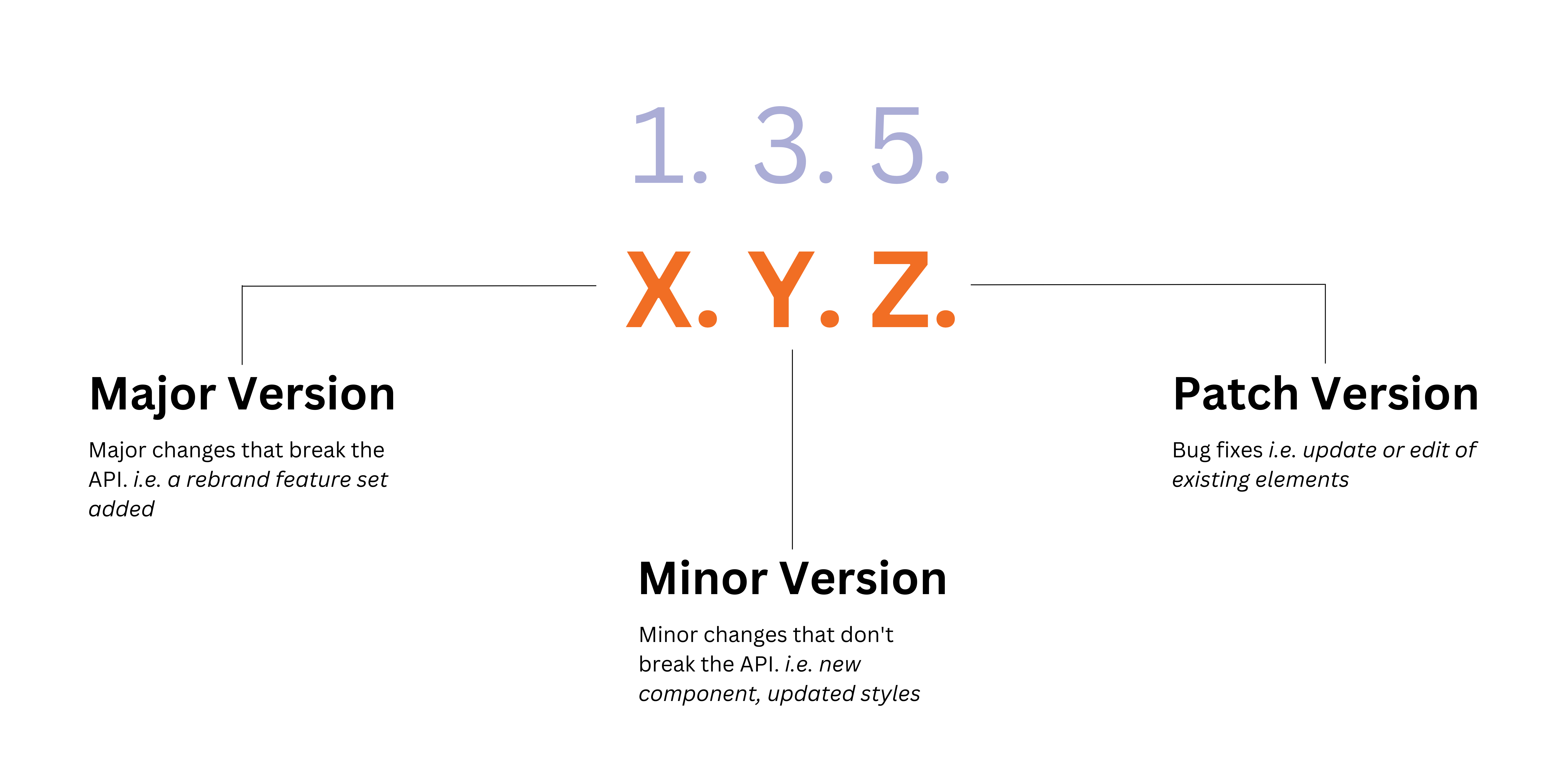

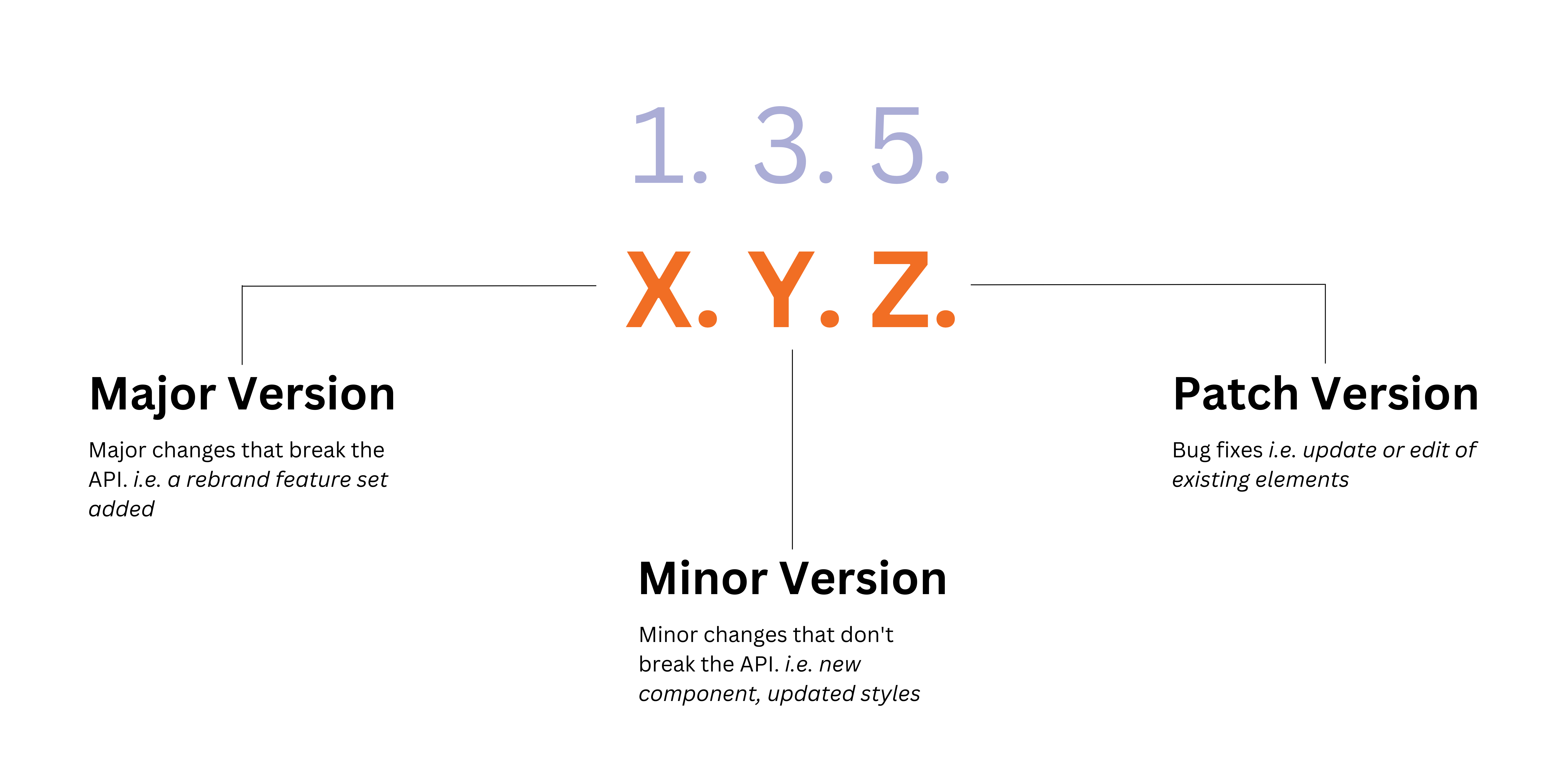

- Semantic versioning: Adopting semantic versioning (SemVer) provides a clear and standardized approach to versioning. A SemVer version number consists of major, minor, and patch components, which lets developers communicate the nature of changes precisely. This approach helps API users understand the impact of version updates and make informed decisions about integration updates.

- Versioning via URL or header: Include a version identifier in the API URL or in a request header so that clients can explicitly specify the desired API version when making requests. This allows clients to pin their integrations to specific versions while leaving room for future updates.

- Robust testing and integration suites: Implement comprehensive testing strategies, including integration testing, to validate API compatibility and functionality across versions. Automation and continuous integration techniques can help identify compatibility issues early in the development cycle.

- Phase-out and deprecation periods: When introducing breaking changes, specify a deprecation period and a clear schedule for removing deprecated features. This allows developers using older versions to incrementally upgrade their integrations and reduce the impact on their applications.

- Documentation and communication: Maintain detailed and up-to-date documentation that clearly explains release policies, deprecated features, and upgrade paths. Establish effective communication channels, such as release notes and developer forums, to inform API customers of changes and provide support during the transition.
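
As an illustration of versioning via URL or header, the sketch below resolves the requested version on the server side. The path prefix `/v1/`, the vendor media type `application/vnd.example.v1+json`, and the set of supported versions are all hypothetical choices for the example, not a convention required by any standard.

```python
import re
from typing import Mapping

SUPPORTED_VERSIONS = {"1", "2"}  # hypothetical: versions the server still serves
DEFAULT_VERSION = "2"            # hypothetical: used when the client names none

_PATH_VERSION = re.compile(r"^/v(\d+)(?=/|$)")
_MEDIA_VERSION = re.compile(r"application/vnd\.example\.v(\d+)\+json")


def resolve_version(path: str, headers: Mapping[str, str]) -> str:
    """Pick the API version from the URL path first, then the Accept header."""
    match = _PATH_VERSION.match(path)
    if match is None:
        match = _MEDIA_VERSION.search(headers.get("Accept", ""))
    version = match.group(1) if match else DEFAULT_VERSION
    if version not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported API version: {version}")
    return version
```

Rejecting unknown versions explicitly, instead of silently falling back to the default, makes it obvious to a client when it is pinned to a version that has been removed.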

Introduction to Semantic Versioning and Its Benefits

Semantic versioning (SemVer) is a versioning scheme that provides a standardized and intuitive approach to API versioning. It consists of three components: major version, minor version, and patch version. Each component conveys specific information about the type of changes introduced with a new version.

- Major version: Increase the major version when introducing backward-incompatible changes. This means that significant changes have been made to the API that may require updates to client integrations.

- Minor version: Increase the minor version when adding new features or functionality in a backward-compatible manner. This indicates that new features have been added without affecting existing integrations.

- Patch version: Increase the patch version for backward-compatible bug fixes and minor updates that do not introduce new features or breaking changes.

Adopting semantic versioning brings several benefits to API developers and consumers. It enables clear communication about the impact of version updates, making it easier for developers to understand the scope and nature of changes. Semantic versioning also helps API consumers make informed decisions about when and how to update their integrations, minimizing the risk of unexpected behavior or breaking changes. It also establishes a widely recognized versioning convention that promotes consistency and compatibility across different APIs.
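
The three bump rules can be expressed in a few lines. This sketch deliberately ignores pre-release and build-metadata suffixes, and the change labels (`"breaking"`, `"feature"`, `"fix"`) and the `is_safe_upgrade_from` helper are names invented for the example.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        """Parse a plain MAJOR.MINOR.PATCH string such as '1.4.2'."""
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)

    def bump(self, change: str) -> "Version":
        """Return the next version for a given kind of change."""
        if change == "breaking":   # backward-incompatible change
            return Version(self.major + 1, 0, 0)
        if change == "feature":    # backward-compatible addition
            return Version(self.major, self.minor + 1, 0)
        if change == "fix":        # backward-compatible bug fix
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown change type: {change}")

    def is_safe_upgrade_from(self, current: "Version") -> bool:
        """True when this version is newer or equal and shares the major line."""
        return self.major == current.major and self >= current
```

A client pinned to `1.4.2` can use a check like `is_safe_upgrade_from` to accept `1.5.0` automatically while flagging `2.0.0` for manual review.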

By following these strategies and embracing semantic versioning, developers can effectively manage versioning and backward compatibility to ensure smooth transitions and minimize disruption to existing integrations.

Rate Limiting and Throttling

Understanding Rate Limiting and Throttling Concepts

Rate limiting and throttling mechanisms are essential for maintaining API stability, performance, and security. Rate limiting is the restriction on the number of requests a client can make to an API within a given time period. Throttling, on the other hand, regulates the speed at which requests are processed by the API server.

Rate limits are usually set by the API provider and are often based on factors such as the user's subscription level, the type of API endpoint, or the specific API function being used. These limits are put in place to prevent abuse, protect server resources, and ensure fair usage by all API customers. Throttling, on the other hand, controls the speed at which requests are processed to prevent server overload and maintain overall system performance.
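
One common way to implement a rate limit is the token bucket algorithm, sketched below. It is only one of several possible approaches (fixed and sliding windows are others), and nothing here reflects how any particular provider enforces its limits. The class and parameter names are assumptions of the example.

```python
import time
from typing import Callable


class TokenBucket:
    """Allows bursts up to `capacity` and a sustained rate of `rate_per_second`."""

    def __init__(self, rate_per_second: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self._rate = rate_per_second
        self._capacity = float(capacity)
        self._tokens = float(capacity)  # start with a full bucket
        self._clock = clock             # injectable so tests can control time
        self._last = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the limit is hit."""
        now = self._clock()
        # Refill in proportion to the time elapsed, never beyond capacity.
        self._tokens = min(self._capacity,
                           self._tokens + (now - self._last) * self._rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

The same class is useful on the client side: calling `allow()` before each outgoing request keeps your application under a known provider limit instead of discovering it through rejected requests.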

Challenges and Consequences of Exceeding API Usage Limits

While rate limiting and throttling are necessary for maintaining a stable API ecosystem, exceeding usage limits has consequences. When rate limits are exceeded, API requests can be denied (commonly with an HTTP 429 Too Many Requests response) or delayed, resulting in a degraded user experience. This can mean timeouts, error responses, or even complete denial of access to the API.

In addition, exceeding API usage limits may result in penalties or restrictions imposed by the API provider. These penalties may include temporary or permanent suspension of API access, throttling of requests, or additional charges for excessive usage. In addition to the immediate consequences, there may be reputational damage and loss of trust among API users if limits are consistently exceeded.

Techniques for Handling Rate Limits and Optimizing API Usage

One approach is to implement efficient request management strategies, such as batch processing or pagination, to minimize the number of API calls required. This reduces the risk of hitting rate limits while improving overall efficiency.
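
As a sketch of the pagination idea, the generator below walks a cursor-paginated endpoint one page at a time. The page shape (`items` plus `next_cursor`) and the `fetch_page` callable are hypothetical; real APIs use a variety of pagination schemes, so check the provider's documentation.

```python
from typing import Callable, Dict, Iterator, Optional

# Hypothetical page shape: {"items": [...], "next_cursor": "abc" or None}
Page = Dict[str, object]


def iter_items(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[object]:
    """Yield every item across all pages, requesting each page exactly once."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        yield from page["items"]
        cursor = page.get("next_cursor")
        if cursor is None:
            return
```

Because the generator is lazy, a caller that stops iterating early never requests the remaining pages, which saves calls against the rate limit.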

Closely monitoring API usage is another important technique. By keeping track of your API usage, you can proactively manage your rate limits and plan accordingly. This includes regularly reviewing API usage reports, understanding the limits, and adjusting your application's behavior to stay within the set limits.

Rate limit errors also call for proper error handling. This includes retries with exponential backoff and circuit breaker patterns to handle temporary rate limit overruns.
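
A retry loop with exponential backoff might look like the following sketch. The response tuple, the `send` callable, and the default values are assumptions for illustration; the example treats HTTP 429 and 5xx responses as retryable and prefers a server-supplied `Retry-After` hint when one is available.

```python
import random
import time
from typing import Callable, Optional, Tuple

# Hypothetical response shape: (status_code, retry_after_seconds_or_None, body)
Response = Tuple[int, Optional[float], str]


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0) -> float:
    """Full-jitter delay: a random value in [0, min(cap, base * 2**attempt)]."""
    return random.uniform(0.0, min(cap, base * (2 ** attempt)))


def call_with_retries(send: Callable[[], Response], max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep) -> Response:
    """Retry 429 and 5xx responses; return the last response when attempts run out."""
    for attempt in range(max_attempts):
        response = send()
        status, retry_after, _body = response
        retryable = status == 429 or 500 <= status < 600
        if not retryable or attempt == max_attempts - 1:
            return response
        # Prefer the server's hint when present, otherwise back off exponentially.
        sleep(retry_after if retry_after is not None else backoff_delay(attempt))
    raise ValueError("max_attempts must be at least 1")
```

The random jitter spreads retries from many clients over time, so they do not all hit the API again at the same moment.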

In addition, consider alternative APIs or service tiers that offer higher rate limits. This may mean negotiating with the API provider for higher limits based on your specific use case or exploring other APIs that better meet your needs.

Overall, API users need to understand the concepts of rate limiting and throttling, be aware of the challenges and consequences of exceeding limits, and be able to implement optimization techniques to effectively manage their API usage and ensure smooth and reliable integration into the API ecosystem.

Error Handling and Resilience

Common Errors and Exceptions in API Integration

Working with APIs can sometimes lead to errors and exceptions. These problems can occur for a variety of reasons, including incorrect request parameters, network connectivity issues, server-side errors, or even authentication and authorization issues. It is important to have a clear understanding of the most common errors and exceptions that can occur during API integration.

Among the most common errors is 400 Bad Request, which means that the server cannot understand or process the request due to invalid syntax or missing parameters. Another common error is 401 Unauthorized, which means that the request does not contain valid authentication credentials. A 404 Not Found response means that the requested resource does not exist on the server. These are just a few examples, and it is important to be familiar with the different error codes and their meaning in order to handle them effectively.

Importance of Proper Error Handling and Resilience Mechanisms

It is critical to implement adequate error handling and resilience mechanisms. Error handling ensures that problems encountered during API integration are dealt with properly and that users or downstream systems are notified of the errors. Resilience, on the other hand, refers to the ability of a system to recover from errors and continue to function reliably.

Proper error handling helps maintain the stability and reliability of your API integrations. It allows you to provide your customers with meaningful error messages that help troubleshoot and reduce support efforts. Effective error handling can also improve the overall user experience by providing helpful information and guidance when errors occur.

Resilience mechanisms, such as retrying failed requests, implementing circuit breakers, or applying fallback strategies, are essential to ensuring the reliability and availability of your API integrations. By anticipating and planning for potential failures, you can minimize downtime and prevent cascading failures that can have a significant impact on your systems and users.

Implementing Effective Error Handling Strategies

Implementing effective error handling strategies is critical to the stability and reliability of your integrations. The following are some important considerations for implementing such strategies:

- Thoroughly understand the API documentation: Familiarize yourself with the API documentation to understand the expected behavior, error codes, and error messages returned by the API. With this knowledge, you can handle errors appropriately and provide relevant information to users.

- Proper handling of error responses: When an error occurs, make sure you analyze the error response and extract meaningful information from it. Use this information to create clear and concise error messages that can provide guidance to users or downstream systems on how to fix the problem.

- Ensure error logging and monitoring: Implement robust error logging and monitoring mechanisms to capture and track errors. This allows you to identify patterns, track recurring issues, and proactively address potential problems.

- Implement retries and exponential backoff: When transient errors occur, implement a retry mechanism with an exponential backoff strategy. This approach enables automatic retries while minimizing API impact and avoiding overload from repeated failed requests.

- Graceful handling of rate limiting and throttling: APIs often impose rate limits or implement throttling mechanisms to protect their resources. It is critical to handle these scenarios gracefully by staying within the prescribed limits and implementing strategies for the errors returned when a rate limit is exceeded.

- Communicate effectively with API providers: Create open lines of communication with API providers. Reach out to them when errors occur and ask for guidance or support. Building a strong relationship with the API provider can lead to faster resolution of issues and better collaboration.
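
The points on analyzing error responses and logging them can be sketched as follows. The error body shape `{"error": {"code": ..., "message": ...}}` is a hypothetical convention; real APIs differ, which is why the parser falls back to the raw body when the shape does not match.

```python
import json
import logging

logger = logging.getLogger("api_integration")


class ApiError(Exception):
    """Carries the status, a machine-readable code, and a readable message."""

    def __init__(self, status: int, code: str, message: str):
        super().__init__(f"{status} {code}: {message}")
        self.status, self.code, self.message = status, code, message


def parse_error(status: int, body: str) -> ApiError:
    """Turn an error response into an ApiError, tolerating non-JSON bodies."""
    code = "unknown_error"
    message = body.strip()[:200] or "no details provided"
    try:
        payload = json.loads(body)
        error = payload.get("error", {}) if isinstance(payload, dict) else {}
        if isinstance(error, dict):
            code = str(error.get("code", code))
            message = str(error.get("message", message))
    except json.JSONDecodeError:
        pass  # keep the raw-body fallback, e.g. for an HTML error page
    # Log a compact, structured line so recurring failures are easy to spot.
    logger.warning("API call failed: status=%s code=%s", status, code)
    return ApiError(status, code, message)
```

Keeping the machine-readable `code` separate from the human-readable `message` lets calling code branch on the former while showing the latter to users.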

By implementing these effective error handling strategies, you can ensure that your API integrations remain stable and reliable and provide a seamless experience for your users and downstream systems.

Best Practices for Overcoming API Integration Challenges

Importance of Comprehensive and Up-to-Date API Documentation

Comprehensive and up-to-date API documentation plays a critical role in successful integrations. Clear and detailed documentation not only provides valuable insight into the functionality of the API, but also helps you understand how to interact with it effectively. Comprehensive documentation covers all essential aspects of the API, including endpoints, request/response formats, authentication methods, error handling, and any specific nuances or limitations.

Having up-to-date API documentation is equally important. APIs evolve over time with updates, new features, and bug fixes. Outdated documentation can lead to confusion and inefficiency, which in turn leads to integration issues. Be vigilant and ensure that the documentation you rely on is regularly maintained and reflects the current state of the API. This includes documenting any changes or deprecations to endpoints, updated request/response formats, and changes to authentication mechanisms.

Tools and Techniques for Exploring APIs

It's always worth considering new tools and techniques that can improve your workflow and help you manage integration issues more efficiently.

API exploration tools such as Postman, Insomnia, or cURL remain invaluable for testing API endpoints, making requests, and checking responses. Clients like Postman and Insomnia also provide features such as request history, parameter management, and response visualization that allow you to explore API functions more deeply.

Also, consider using API documentation tools such as Theneo. Theneo and similar tools provide machine-readable specifications and allow you to automatically create client SDKs, server stubs, and interactive API documentation. By using these tools and techniques, you can streamline your API exploration process, reduce manual errors, and improve overall integration efficiency.

Integration Testing and Monitoring

Significance of Thorough Integration Testing

Effective testing ensures that your integrations are robust, reliable, and resilient to changes in the API ecosystem. By performing comprehensive integration testing, you can identify potential issues early in the development process and mitigate risks before they impact your production environment.

To perform thorough integration testing, it's important to cover different scenarios, including positive and negative test cases. Positive tests verify that the API works correctly under normal conditions, while negative tests help uncover potential edge cases and validate error handling mechanisms. You should also test different input combinations, evaluate performance under different loads, and evaluate the API's compatibility with different environments.
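
Positive and negative test cases can be sketched with Python's built-in `unittest` module. To keep the example self-contained, it runs against a stubbed transport, not a real API; the `get_order` client function, the `/orders/{id}` path, and the stub's behavior are all invented for illustration. In a real integration suite the same tests would point at a sandbox or staging environment.

```python
import json
import unittest
from typing import Callable, Tuple

# Hypothetical transport: (method, path) -> (status, body)
Transport = Callable[[str, str], Tuple[int, str]]


def get_order(transport: Transport, order_id: int) -> dict:
    """Client code under test: fetch one order and map failures to exceptions."""
    status, body = transport("GET", f"/orders/{order_id}")
    if status == 404:
        raise LookupError(f"order {order_id} not found")
    if status != 200:
        raise RuntimeError(f"unexpected status {status}")
    return json.loads(body)


def stub_transport(method: str, path: str) -> Tuple[int, str]:
    """Stand-in for the remote API: knows one order and one failing path."""
    if (method, path) == ("GET", "/orders/1"):
        return 200, json.dumps({"id": 1, "status": "shipped"})
    if path == "/orders/500":
        return 500, ""
    return 404, json.dumps({"error": "not found"})


class GetOrderTests(unittest.TestCase):
    def test_returns_order_on_success(self):  # positive case
        self.assertEqual(get_order(stub_transport, 1)["status"], "shipped")

    def test_missing_order_raises_lookup_error(self):  # negative case
        with self.assertRaises(LookupError):
            get_order(stub_transport, 2)

    def test_server_error_is_not_swallowed(self):  # negative case
        with self.assertRaises(RuntimeError):
            get_order(stub_transport, 500)
```

Saved as a module, these tests can be run with `python -m unittest`, which also makes them easy to wire into a CI pipeline.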

Implement Automated Testing and Monitoring for APIs

When it comes to integration testing, automation can greatly increase efficiency and accuracy. By using tools such as testing frameworks, scripting languages, and continuous integration/continuous deployment (CI/CD) pipelines, you can automate test case execution, generate test reports, and ensure consistent and repeatable testing processes.

In addition, implementing API monitoring solutions is critical to maintaining the health and performance of your integrations. With real-time monitoring tools, you can track API uptime, response times, error rates and other key metrics. Proactive monitoring alerts you to potential issues before they impact your users, so you can take timely action to avoid disruption. By integrating monitoring into your API ecosystem, you can identify performance bottlenecks, detect anomalies, and make data-driven decisions to optimize your integrations.

Monitoring API Performance and Health

Monitoring API performance and health is a fundamental aspect of maintaining successful integrations. By continuously monitoring the performance of the APIs you integrate with, you can identify and address issues such as slow response times, high error rates, or data retrieval inconsistencies.

To effectively monitor the performance and health of APIs, consider using monitoring tools that provide comprehensive metrics and insights. These tools can track key performance indicators (KPIs) such as response time, latency, throughput and error rates. They can also help you identify trends, correlate performance with external factors and set up alerts for critical thresholds. By proactively monitoring API performance, you can ensure optimal user experiences, meet service level agreements (SLAs), and identify areas in need of optimization.
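
To show how such metrics and alert thresholds fit together, here is a small sketch that computes a 95th-percentile latency and an error rate from collected samples. The sample shape, the threshold values, and the choice to count only 5xx responses as errors are assumptions of the example; a dedicated monitoring tool would normally do this for you.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Each sample: (latency in milliseconds, HTTP status code)
Sample = Tuple[float, int]


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value covering pct% of the data."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: List[Sample], p95_threshold_ms: float = 500.0,
              error_rate_threshold: float = 0.05) -> Dict[str, object]:
    """Compute p95 latency and error rate, and flag when either exceeds its threshold."""
    if not samples:
        raise ValueError("no samples to summarize")
    p95 = percentile([latency for latency, _ in samples], 95)
    error_rate = sum(1 for _, status in samples if status >= 500) / len(samples)
    return {
        "p95_ms": p95,
        "error_rate": error_rate,
        "alert": p95 > p95_threshold_ms or error_rate > error_rate_threshold,
    }
```

Percentiles are usually more informative than averages for latency, because a small number of very slow requests can hide behind a healthy-looking mean.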

Prioritizing comprehensive and up-to-date API documentation, effectively exploring APIs, conducting thorough integration testing, and implementing automated testing and monitoring can help you overcome challenges with confidence. Monitoring API performance and health ensures the continued success of your integrations and enables you to deliver reliable, high-performing solutions to your users.

Implementing Middleware and Integration Layers

Middleware and integration layers play a critical role in the successful implementation of APIs. These layers act as intermediaries between different components, systems, or services, facilitating seamless communication and data exchange. Middleware acts as a bridge, providing a standardized interface and enabling interoperability between different systems. Integration layers, on the other hand, focus on integrating different systems, ensuring data consistency and streamlining business processes. By leveraging middleware and integration layers, enterprises can achieve greater flexibility, scalability, and efficiency in API integration.

Benefits of Using Middleware for API Integration

Using middleware for API integration brings several benefits, especially to organizations that work with many APIs. First, middleware reduces the complexity of integration by abstracting the underlying technical details and allowing developers to focus on developing the core functionality of their applications. It also promotes code reusability and modularity because middleware components can be shared across different APIs and projects.

In addition, middleware provides a central point of control and management for API integrations and enables efficient monitoring, logging and error handling. It facilitates enforcement of security policies, data transformations, and protocol translations, and ensures seamless interoperability between systems with different architectures and technologies.

In addition, middleware solutions often provide advanced features such as message queuing, event-driven architecture and caching mechanisms that can improve the performance, reliability and scalability of API integrations. These benefits collectively contribute to accelerated development cycles, reduced maintenance requirements, and improved overall system stability.
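
At its simplest, a middleware layer is a chain of wrappers around the code that actually calls the API. The sketch below shows that idea with two illustrative middlewares, one adding an authorization header and one recording calls. The request and response dictionaries and all function names are assumptions for the example and do not mirror any specific middleware product.

```python
from typing import Callable, Dict, List

Request = Dict[str, object]
Response = Dict[str, object]
Handler = Callable[[Request], Response]
Middleware = Callable[[Handler], Handler]


def with_auth(api_key: str) -> Middleware:
    """Middleware that injects an Authorization header into every request."""
    def middleware(next_handler: Handler) -> Handler:
        def handler(request: Request) -> Response:
            headers = dict(request.get("headers", {}))
            headers["Authorization"] = f"Bearer {api_key}"
            return next_handler({**request, "headers": headers})
        return handler
    return middleware


def with_call_log(log: List[str]) -> Middleware:
    """Middleware that records each request and the status of its response."""
    def middleware(next_handler: Handler) -> Handler:
        def handler(request: Request) -> Response:
            log.append(f"-> {request['method']} {request['path']}")
            response = next_handler(request)
            log.append(f"<- {response['status']}")
            return response
        return handler
    return middleware


def build_pipeline(handler: Handler, middlewares: List[Middleware]) -> Handler:
    """Wrap `handler` so that the first middleware in the list runs outermost."""
    for middleware in reversed(middlewares):
        handler = middleware(handler)
    return handler
```

Because each concern lives in its own wrapper, the same logging or authentication middleware can be reused across every API the application integrates with.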

Popular Middleware Solutions and Frameworks

There are several popular middleware solutions and frameworks that can help in creating robust and scalable integrations. Some of the notable options are:

- Apache Kafka: Kafka is a distributed streaming platform that excels at processing data streams in real time with high throughput and fault tolerance. It offers a scalable pub/sub architecture, making it suitable for building event-driven systems and implementing reliable API integrations.

- MuleSoft Anypoint Platform: The Anypoint Platform provides a comprehensive set of middleware tools and services for API design, integration, management and analysis. It provides a unified platform for building, deploying, and monitoring APIs and enables seamless connectivity between different applications, services, and data sources.

- Spring Integration: Spring Integration is based on the popular Spring Framework and provides a lightweight yet powerful integration solution. It provides a set of components, patterns and connectors for building message-driven systems, processing data transformations and orchestrating complex workflows in API integrations.

- Microsoft Azure Logic Apps: Logic Apps is a cloud-based integration service offered by Microsoft Azure. It provides a visual designer and a wide range of connectors to create workflows and orchestrations that connect APIs, SaaS applications and on-premises systems. Logic Apps provides robust monitoring, debugging and error handling capabilities.

- IBM Integration Bus: IBM Integration Bus (formerly known as IBM WebSphere Message Broker) is an enterprise integration solution designed to connect applications, services and data across different platforms and protocols. It provides a wide range of connectors, transformation capabilities, and message routing features to enable seamless API integration in complex environments.

These middleware solutions and frameworks are just a few examples, and there are many more on the market. The choice of middleware depends on the specific project requirements, existing technology stack, scaling requirements and integration complexity. API developers should carefully review the features, capabilities, and community support of the various middleware options before selecting the one best suited for their API integration needs.

Implementing Retry and Circuit Breaker Patterns

Understanding Retry and Circuit Breaker Patterns

Retry and circuit breaker patterns are essential techniques for building resilient API integrations, especially in the face of transient outages and network problems. In the retry pattern, multiple attempts are made to execute a failed operation or request with the goal of eventually succeeding or providing a reliable fallback mechanism. The circuit breaker pattern, on the other hand, helps prevent cascading failures by intelligently handling repeated failures and temporarily "breaking" the circuit to prevent further requests until the system stabilizes.

Handling Transient Failures and Network Issues

API integrations often experience transient outages and network issues that can disrupt expected operations. Transient outages refer to temporary issues that prevent successful communication, such as network timeouts, temporary unavailability of services, or server errors. Implementing a retry mechanism allows the integration layer to automatically retry failed operations after a short delay or with an incremental backoff strategy. Retrying requests can increase the chances of success when transient problems resolve themselves.

Network issues such as high latency, packet loss, or network congestion can also affect API integrations. To mitigate the impact of these issues, implementing the circuit breaker pattern is beneficial. The circuit breaker monitors the success and failure rates of API calls and trips (opens) when the failure rate exceeds a predefined threshold. When the circuit is open, subsequent requests are immediately rejected to prevent error propagation. After a certain period of time, the circuit enters a half-open state that allows a limited number of requests to test the stability of the system. If these requests are successful, the circuit is closed again; otherwise it is reopened.
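The state transitions can be sketched as a small class. This is a simplified illustration under stated assumptions: it counts consecutive failures rather than a failure rate, and it allows a single trial request in the half-open state. The class and parameter names are hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.state = "closed"
        self.opened_at = 0.0

    def call(self, operation):
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit is open; request rejected")
            self.state = "half-open"  # timeout elapsed: allow a trial request
        try:
            result = operation()
        except Exception:
            self.failures += 1
            # A failed trial request, or too many failures, (re)opens the circuit.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.state = "open"
                self.opened_at = self.clock()
            raise
        # Any success resets the failure count and closes the circuit.
        self.failures = 0
        self.state = "closed"
        return result
```

Wrapping each outbound call in `breaker.call(...)` means that, once the threshold is reached, callers fail fast with `CircuitOpenError` instead of waiting on a service that is likely still down.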

Applying Retry and Circuit Breaker Patterns in API Integration

To effectively apply retry and circuit breaker patterns, API developers can use various libraries and frameworks that provide built-in support for these patterns. For example, libraries such as Polly for .NET or Resilience4j for Java provide comprehensive support for implementing retry and circuit breaker policies with configurable options.

When implementing retries, factors such as the maximum number of retries, backoff strategies, and handling exceptions and error codes must be considered. When implementing circuit breakers, appropriate thresholds, timeout periods, and strategies for transitioning between open, closed, and half-open states must also be defined.

To monitor the effectiveness of retry and circuit breaker patterns, it is advisable to incorporate appropriate logging and monitoring mechanisms. This helps identify error patterns, optimize configuration, and gain valuable insight into the stability and performance of API integrations.

By incorporating retry and circuit breaker patterns into API integrations, developers can significantly improve the resilience and reliability of their systems. These patterns mitigate the impact of transient outages, network issues, and system congestion, ensuring smooth operations and an improved end-user experience.

Caching and Data Synchronization

Utilizing Caching Mechanisms to Improve API Performance

Caching plays an important role in improving API performance. Caching involves storing data that is frequently accessed in a temporary storage layer so that the data does not need to be repeatedly retrieved from the original source. By implementing an effective caching mechanism, you can significantly shorten response time and reduce the load on your API servers.

To take advantage of caching, it is important to identify the right data elements for caching. This typically includes static or infrequently changing data that is shared across multiple API requests. Examples include reference data, configuration settings, or frequently used database queries. By storing such data in a cache, subsequent requests can be served directly from the cache without the need to perform expensive operations or query the underlying data source.

When implementing caching, factors such as cache expiration policies, eviction strategies, and cache invalidation mechanisms must be considered. Time- or event-based expiration policies ensure that cached data stays fresh, while eviction strategies help control memory usage by removing less frequently accessed items. In addition, cache invalidation techniques such as cache tags or cache keys allow you to update or remove specific cache entries as the underlying data changes.
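To make these three concerns concrete, here is a minimal in-process cache sketch. It assumes a fixed time-to-live for expiration, least-recently-used (LRU) eviction as one possible eviction strategy, and key-based invalidation. The class name `TTLCache` and its parameters are illustrative only.

```python
import time
from collections import OrderedDict


class TTLCache:
    def __init__(self, max_items=128, ttl_seconds=60.0, clock=time.monotonic):
        self.max_items = max_items
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._items = OrderedDict()  # key -> (expires_at, value)

    def get(self, key, default=None):
        entry = self._items.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._items[key]  # time-based expiration
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key, value):
        self._items[key] = (self.clock() + self.ttl_seconds, value)
        self._items.move_to_end(key)
        while len(self._items) > self.max_items:
            self._items.popitem(last=False)  # evict the least recently used entry

    def invalidate(self, key):
        # Event-based invalidation: call this when the underlying data changes.
        self._items.pop(key, None)
```

A caller would typically check `cache.get(key)` first and only query the underlying data source on a miss, storing the result with `cache.set(key, value)`.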

Implementing Data Synchronization Strategies for Distributed Systems

In distributed systems involving multiple API servers or microservices, ensuring data consistency and synchronization becomes a critical factor. Implementing effective data synchronization strategies helps harmonize data across these components and maintain a coherent view of the system.

A common approach is to use distributed consensus protocols such as the Raft or Paxos algorithms. These protocols allow multiple nodes to agree on the state of shared data by coordinating their actions over a series of communication rounds. They provide fault tolerance and ensure consistency even in the face of failures or network interruptions. However, it's important to evaluate the tradeoffs, as consensus protocols can introduce additional complexity and latency into the system.

Alternatively, an event-driven architecture can facilitate data synchronization in distributed systems. Events represent state changes or important actions within the system and can be forwarded to a message broker or event bus. Subscribed components can respond to these events and update their local data accordingly. This approach provides loose coupling between components and allows for eventual consistency, but requires careful design and consideration of event sequencing, delivery guarantees, and potential event loss scenarios.
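The event-driven approach can be sketched in a few lines. The `EventBus` below is an in-process stand-in for a real message broker, and the event fields (`sku`, `seq`, `price`) are assumptions made for the example. The subscriber uses a per-key sequence number to ignore duplicate or out-of-order events, which is one simple way to deal with the sequencing concerns mentioned above.

```python
from collections import defaultdict


class EventBus:
    """In-process stand-in for a message broker (hypothetical, for illustration)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        for handler in self._subscribers[topic]:
            handler(event)


class PriceReplica:
    """Keeps a local copy of prices, ignoring stale or repeated events."""

    def __init__(self):
        self.prices = {}
        self._last_seq = {}

    def on_price_changed(self, event):
        sku, seq = event["sku"], event["seq"]
        # Skip duplicates and out-of-order events using a per-key sequence number.
        if seq <= self._last_seq.get(sku, 0):
            return
        self._last_seq[sku] = seq
        self.prices[sku] = event["price"]
```

Because the handler is idempotent, the broker can deliver an event more than once without corrupting the replica, which makes at-least-once delivery guarantees easier to live with.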

Choosing Appropriate Caching and Synchronization Approaches

When it comes to choosing caching and synchronization approaches for your APIs, there is no one-size-fits-all solution. The optimal choice depends on a number of factors, including the nature of your data, expected usage patterns, and the performance requirements of your API.

For caching, consider combining in-memory caches, distributed caches, and content delivery networks (CDNs). In-memory caches, such as Redis or Memcached, are well suited to frequently accessed data that must be served with low latency. Distributed caches, such as Hazelcast or Apache Ignite, provide scalability and fault tolerance across multiple nodes. CDNs are beneficial for caching static content or serving geographically dispersed users.

As for synchronization, you need to evaluate the requirements of your distributed system. If strong consistency is paramount, consensus protocols such as Raft or Paxos may be a good choice. On the other hand, if eventual consistency is enough, event-driven architectures with message brokers such as Apache Kafka or RabbitMQ offer flexibility and scalability.

Ultimately, the choice of caching and synchronization approaches should be based on your specific API use cases and performance goals. Consider performing performance testing and analysis to evaluate the impact of different strategies on your system's responsiveness, scalability, and data integrity.

Ensuring the security of sensitive data exchanged via APIs is a recurring problem that requires robust authentication mechanisms and encryption protocols. In addition, scaling API integrations to handle increasing traffic and evolving business needs requires careful architectural design and optimization. Another hurdle is keeping up with version changes and effectively communicating those changes to stakeholders, given the need to maintain backward compatibility and avoid disruption to existing users. Finally, the importance of thorough and up-to-date documentation cannot be overstated, as it facilitates seamless integration and helps developers understand the intricacies of an API.

Following best practices is critical to successful API integration. By following these practices, we minimize the risk of encountering common pitfalls and ensure smoother, more efficient integrations. First, implementing a robust security framework is critical to protecting sensitive data and guarding against unauthorized access. Using industry-standard authentication protocols such as OAuth and implementing encryption mechanisms such as SSL/TLS are key components of a secure integration. Second, a clearly defined versioning strategy allows APIs to evolve seamlessly while minimizing disruption to existing customers. Clearly documenting version changes and providing effective communication channels for developers helps manage these transitions effectively. Finally, investing in comprehensive and up-to-date documentation, including examples and use cases, improves the developer experience and accelerates the integration process. Continuously reviewing and updating documentation as APIs evolve ensures that accurate and reliable information is available to developers.

Understanding API Integration Challenges

Incompatible Data Formats and Protocols

One of the biggest challenges with API integration is dealing with incompatible data formats. APIs often use different formats for data representation, such as JSON, XML, and CSV. JSON (JavaScript Object Notation) has become popular because of its simplicity and ease of use. It provides a lightweight and human-readable format for structured data, making it highly compatible with modern web applications. XML (eXtensible Markup Language), on the other hand, is a versatile markup language known for its hierarchical data representation. Although XML was widely used in the past, it has gradually been replaced by JSON, which is simpler and more efficient to parse. In addition, CSV (Comma-Separated Values) is a simple text format that is commonly used for data exchange, especially in scenarios where a hierarchical structure is not required.

In addition to data format challenges, another major hurdle to API integration is the differences between communication protocols. APIs can use different protocols and styles, each with its own characteristics and requirements. The most common ones that you'll probably be familiar with are REST (Representational State Transfer), SOAP (Simple Object Access Protocol), and GraphQL. REST, which is based on the HTTP protocol, provides a simple and lightweight approach to building APIs. It emphasizes statelessness, scalability, and the use of standard HTTP methods such as GET, POST, PUT, and DELETE to interact with resources. SOAP, on the other hand, relies on XML for message exchange and often requires complex XML schemas and Web Services Description Language (WSDL). GraphQL, a more recent alternative, is a query language for APIs that allows clients to request exactly the data they need in a flexible and efficient manner.

Solutions for Handling Data Format and Protocol Discrepancies

To overcome the challenges posed by incompatible data formats and protocols, experienced API experts have several solutions at their disposal. Data transformation is an important technique that allows you to convert data seamlessly between different formats. By using libraries and frameworks, you can effectively parse and serialize data in different formats, ensuring compatibility between the API and your application. Another useful approach is to use adapter patterns. By encapsulating the logic required to translate data formats or protocols, adapters act as intermediaries between your application and the API. They perform the necessary conversions, enabling seamless integration while maintaining the integrity of the underlying systems. In addition, middleware solutions and integration tools provide built-in support for handling various data formats and protocols. These tools provide pre-built connectors and converters that simplify the integration process and save valuable development time.
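As an illustration of data transformation combined with the adapter pattern, the sketch below converts an XML order document into JSON. The XML layout, the `LegacyOrderAdapter` class, and the `fetch_xml` callable are all hypothetical; a real integration would follow the schema of the API in question.

```python
import json
import xml.etree.ElementTree as ET


def xml_order_to_dict(xml_text):
    """Transform an XML order document into a plain dictionary."""
    root = ET.fromstring(xml_text)
    return {
        "id": root.get("id"),
        "customer": root.findtext("customer"),
        "items": [
            {"sku": item.get("sku"), "quantity": int(item.get("quantity"))}
            for item in root.findall("items/item")
        ],
    }


class LegacyOrderAdapter:
    """Wraps a hypothetical XML-speaking client behind a JSON-friendly interface."""

    def __init__(self, fetch_xml):
        self._fetch_xml = fetch_xml  # callable that returns an XML string

    def get_order_json(self, order_id):
        return json.dumps(xml_order_to_dict(self._fetch_xml(order_id)))
```

The rest of the application only ever sees JSON, so replacing the XML backend later means changing the adapter rather than every caller.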

By understanding these challenges and deploying appropriate solutions, you can overcome the complexity of incompatible data formats and protocols in API integration.

Authentication and Authorization Issues

Different Authentication Mechanisms (API Keys, OAuth, JWT)

Authentication mechanisms play a critical role in ensuring secure access and protection of sensitive data during API integration. Common authentication methods include API keys, OAuth, and JSON Web Tokens (JWT).

API keys are unique identifiers issued to applications or users for authentication. They act as credentials and are typically included as parameters or headers in API requests. API keys validate the identity of the requestor and grant access based on the specified key.

OAuth, an industry-standard protocol, allows users to grant limited access to their resources without revealing their credentials. It involves obtaining access tokens and refreshing them periodically. OAuth's authorization flow separates the authentication process from access authorization, which increases security and allows users to control the amount of access granted.

JWT (JSON Web Tokens) is a compact and self-contained token format used for authentication and authorization. JWTs contain encoded information about the user or application and are signed to ensure integrity. They contain relevant information such as the user's identity, access permissions, and expiration times, and enable stateless authentication.
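To show what "signed and self-contained" means in practice, here is a simplified sketch of creating and verifying an HS256-signed JWT using only the standard library. It is meant for understanding the format; in production a maintained JWT library is usually the safer choice. The function names and the shared secret are assumptions made for the example.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url(signature)


def verify_jwt(token: str, secret: bytes, now=None) -> dict:
    header_b64, payload_b64, signature_b64 = token.split(".")
    header = json.loads(_b64url_decode(header_b64))
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"),
                        hashlib.sha256).digest()
    # Constant-time comparison avoids leaking information through timing.
    if not hmac.compare_digest(expected, _b64url_decode(signature_b64)):
        raise ValueError("invalid signature")
    claims = json.loads(_b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if "exp" in claims and current >= claims["exp"]:
        raise ValueError("token expired")
    return claims
```

Because the claims travel inside the token and are protected by the signature, the server can validate a request without looking up session state, which is what enables stateless authentication.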

Challenges Related to Authentication and Authorization

Authentication and authorization can present certain challenges in API integration. Some common challenges are:

- Credential management: Proper storage and management of API keys, OAuth tokens, or JWT secrets is critical. It is very important to employ robust key management practices, secure storage mechanisms, and regular credential rotation to mitigate the risk of unauthorized access.

- Token management: Handling access tokens, monitoring their expiration, and ensuring seamless token refresh can be complex. You may face the challenge of storing and transferring tokens securely and implementing efficient mechanisms for retrieving and refreshing tokens to ensure uninterrupted API access.

- Scope and granularity: APIs often require fine-grained control over the permissions and access rights granted to different users or applications. Balancing the need for flexibility with the complexity of managing granular permission rules can be challenging. You have probably dealt with defining and enforcing access policies to ensure that users or applications can access only the resources they need, while preventing unauthorized access.
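The token management challenge is often addressed with a small helper that caches the access token and refreshes it shortly before it expires. The sketch below assumes a `fetch_token` callable that returns a token and its lifetime in seconds; how that callable obtains the token (for example, an OAuth token endpoint) is outside the scope of the example.

```python
import time


class TokenManager:
    def __init__(self, fetch_token, refresh_margin=30.0, clock=time.monotonic):
        self._fetch_token = fetch_token  # returns (access_token, expires_in_seconds)
        self._refresh_margin = refresh_margin
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get_token(self):
        # Refresh slightly before expiry so requests never carry a stale token.
        if self._token is None or self._clock() >= self._expires_at - self._refresh_margin:
            token, expires_in = self._fetch_token()
            self._token = token
            self._expires_at = self._clock() + expires_in
        return self._token

    def auth_header(self):
        return {"Authorization": f"Bearer {self.get_token()}"}
```

Callers simply ask for `auth_header()` on every request and never deal with expiration themselves.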

Best Practices for Secure Authentication and Authorization

It's critical that you adhere to best practices for secure authentication and authorization during API integration. Here are some key practices:

- Use secure channels: Always transmit sensitive authentication data over secure channels such as HTTPS to protect it from eavesdropping and tampering. Encrypting communications ensures the confidentiality and integrity of authentication requests and responses.

- Implement strong identity and access management: Deploy robust identity and access management (IAM) solutions that enforce strong authentication mechanisms such as multi-factor authentication (MFA). IAM frameworks provide comprehensive user management, centralized access control, and auditing capabilities.

- Leverage industry standards: Adhering to established standards such as OAuth and JWT promotes interoperability, ensures compatibility with multiple API providers, and lets you benefit from the collective experience of the developer community.

- Regularly review and renew credentials: Review and change API keys, tokens, or secrets regularly to minimize the impact of potential security breaches. Implementing automated credential rotation mechanisms, such as scheduled key rotation or token expiration policies, helps maintain the security of your API integration.

By following these best practices, you can increase the security of your API integration, protect sensitive data, and reduce the risks associated with authentication and authorization issues.

Versioning and Backward Compatibility

Challenges Related to API Versioning and Compatibility

Managing versioning and ensuring backward compatibility can be complex. As APIs evolve and new features are introduced, maintaining compatibility is critical to prevent existing integrations from being broken. Some challenges that arise in this context are:

- Compatibility with existing clients: Existing clients that may have been developed using older versions of the API must continue to function seamlessly when API updates are introduced. It must be ensured that new versions do not introduce disruptive changes or unexpected behavior.

- Communication and documentation: Effectively communicating changes and updates to API customers can be challenging. Providing clear release notes and documentation highlighting behavioral changes, deprecated features, or new requirements is critical for developers to update their integrations accordingly.

- Dependency management: APIs often rely on third-party libraries or services that may have their own versioning schemes. Coordinating version updates between different dependencies can get complicated, especially when multiple integrations are involved.

Strategies for Managing Versioning and Backward Compatibility

To overcome these challenges and effectively manage API versioning and backward compatibility, developers can use the following strategies:

- Semantic versioning: Adopting semantic versioning (SemVer) provides a clear and standardized approach to versioning. SemVer consists of major, minor, and patch versions and allows developers to accurately communicate the nature of changes. This approach helps API users understand the impact of version updates and make informed decisions about integration updates.

- Versioning via URL or header: Include a version identifier in the API URL or in a request header so that clients can explicitly specify the desired API version when making requests. This allows clients to pin their integrations to specific versions while accommodating future updates.

- Robust testing and integration suites: Implement comprehensive testing strategies, including integration testing, to validate API compatibility and functionality across versions. Automation and continuous integration techniques can help identify compatibility issues early in the development cycle.

- Deprecation and phase-out periods: When introducing breaking changes, specify a deprecation period and a clear schedule for removing deprecated features. This allows developers using older versions to incrementally upgrade their integrations and reduce the impact on their applications.

- Documentation and communication: Maintain detailed and up-to-date documentation that clearly explains release policies, deprecated features, and upgrade paths. Establish effective communication channels, such as release notes and developer forums, to inform API customers of changes and provide support during the transition.
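The "versioning via URL or header" strategy can be sketched as a small resolver on the server side. The supported versions, the default, and the `X-API-Version` header name are assumptions chosen for the example; providers use a variety of conventions.

```python
import re

SUPPORTED_VERSIONS = {"1", "2"}  # hypothetical versions served by this API
DEFAULT_VERSION = "1"


def resolve_api_version(path, headers):
    """Return (version, remaining_path); a URL prefix wins over the header."""
    match = re.match(r"^/v(\d+)(/.*)?$", path)
    if match:
        version, rest = match.group(1), match.group(2) or "/"
    else:
        # "X-API-Version" is an assumed header name, not a standard one.
        version, rest = headers.get("X-API-Version", DEFAULT_VERSION), path
    if version not in SUPPORTED_VERSIONS:
        raise ValueError(f"unsupported API version: {version}")
    return version, rest
```

Falling back to a default version keeps older clients that never sent a version working, while unknown versions are rejected explicitly instead of being silently served by the wrong handler.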

Introduction to Semantic Versioning and Its Benefits

Semantic versioning (SemVer) is a versioning scheme that provides a standardized and intuitive approach to API versioning. It consists of three components: major version, minor version, and patch version. Each component conveys specific information about the type of changes introduced with a new version.

- Major version: Increase the major version when introducing backward-incompatible changes. This means that significant changes have been made to the API that may require updates to client integrations.

- Minor version: Increase the minor version if you are adding new features or functionality in a backward-compatible manner. This indicates that new features have been added without affecting existing integrations.

- Patch version: Increase the patch version for backward-compatible bug fixes and minor updates that do not introduce new features or breaking changes.
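The three components and their bump rules can be expressed in a short sketch. It handles only plain `MAJOR.MINOR.PATCH` strings; pre-release tags and build metadata from the SemVer specification are deliberately left out. The change labels (`breaking`, `feature`, `fix`) are names invented for the example.

```python
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)

    def bump(self, change: str) -> "Version":
        if change == "breaking":
            return Version(self.major + 1, 0, 0)
        if change == "feature":
            return Version(self.major, self.minor + 1, 0)
        if change == "fix":
            return Version(self.major, self.minor, self.patch + 1)
        raise ValueError(f"unknown change type: {change}")

    def is_compatible_upgrade_from(self, older: "Version") -> bool:
        # Same major version and not older: clients should not need code changes.
        return self.major == older.major and self >= older
```

A client pinned to 1.4.2 could use `is_compatible_upgrade_from` to decide that 1.5.0 is safe to adopt automatically, while 2.0.0 needs a manual review.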

Adopting semantic versioning brings several benefits to API developers and customers. It enables clear communication about the impact of version updates, making it easier for developers to understand the scope and nature of changes. Semantic versioning also helps API customers make informed decisions about when and how to update their integrations, minimizing the risk of unexpected behavior or breaking changes. It also establishes an industry-standard practice for versioning that promotes consistency and compatibility across different APIs.

By following these strategies and embracing semantic versioning, developers can effectively manage versioning and backward compatibility to ensure smooth transitions and minimize disruption to existing integrations.

Rate Limiting and Throttling

Understanding Rate Limiting and Throttling Concepts

Rate limiting and throttling mechanisms are essential for maintaining API stability, performance, and security. Rate limiting is the restriction on the number of requests a client can make to an API within a given time period. Throttling, on the other hand, regulates the speed at which requests are processed by the API server.

Rate limits are usually set by the API provider and are often based on factors such as the user's subscription level, the type of API endpoint, or the specific API function being used. These limits are put in place to prevent abuse, protect server resources, and ensure fair usage by all API customers. Throttling, on the other hand, controls the speed at which requests are processed to prevent server overload and maintain overall system performance.
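One common way to enforce such limits is a token bucket, sketched below as an assumption about how a limiter might work rather than how any particular provider implements it. Tokens refill at a steady rate, each request consumes one, and requests are rejected when the bucket is empty.

```python
import time


class TokenBucket:
    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self.rate = rate_per_second
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated_at = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated_at) * self.rate)
        self.updated_at = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

The capacity controls how large a burst is tolerated, while the refill rate sets the sustained request rate. The same class can be used on the client side to stay under a provider's published limit.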

Challenges and Consequences of Exceeding API Usage Limits

While rate limiting and throttling are necessary for maintaining a stable API ecosystem, exceeding these usage limits can have serious consequences. When rate limits are exceeded, API requests can be denied or delayed, resulting in a degraded user experience. This can result in timeouts, error responses, or even complete denial of access to the API.

In addition, exceeding API usage limits may result in penalties or restrictions imposed by the API provider. These penalties may include temporary or permanent suspension of API access, throttling of requests, or additional charges for excessive usage. In addition to the immediate consequences, there may be reputational damage and loss of trust among API users if limits are consistently exceeded.

Techniques for Handling Rate Limits and Optimizing API Usage

One approach is to implement efficient request management strategies, such as batch processing or pagination, to minimize the number of API calls required. This reduces the risk of hitting rate limits while improving overall efficiency.
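Pagination on the client side can be sketched as a generator that follows a cursor until the API reports no further pages. The response shape (`items`, `next_cursor`) and the `fetch_page` callable are assumptions; real APIs name these fields differently.

```python
def iter_all_items(fetch_page, page_size=100):
    """Yield every item from a cursor-paginated endpoint, one request per page."""
    cursor = None
    while True:
        page = fetch_page(cursor=cursor, limit=page_size)
        yield from page["items"]
        cursor = page.get("next_cursor")
        if not cursor:
            break
```

Requesting the largest page size the API permits keeps the number of calls, and therefore the pressure on the rate limit, as low as possible.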

Closely monitoring API usage is another important technique. By keeping track of your API usage, you can proactively manage your rate limits and plan accordingly. This includes regularly reviewing API usage reports, understanding the limits, and adjusting your application's behavior to stay within the set limits.

To handle rate limit errors gracefully, appropriate error handling mechanisms are essential. These include retries with exponential backoff and the circuit breaker pattern to handle temporary rate limit overruns.
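When a provider signals a rate limit with HTTP 429, it may also send a `Retry-After` header. The helper below is a sketch of how a client might pick a wait time: it honors the header when it is given in seconds and otherwise falls back to exponential backoff. The function name and defaults are assumptions, and the HTTP-date form of `Retry-After` is not handled here.

```python
def retry_delay(status_code, headers, attempt, base_delay=1.0, max_delay=60.0):
    """Return seconds to wait before retrying, or None if no retry is warranted."""
    if status_code != 429 and status_code < 500:
        return None  # success, or a client error that a retry will not fix
    retry_after = headers.get("Retry-After", "")
    if retry_after.isdigit():
        # The provider stated how long to wait (in seconds); honor it.
        return min(max_delay, float(retry_after))
    # Otherwise fall back to exponential backoff: base_delay, 2x, 4x, ...
    return min(max_delay, base_delay * 2 ** attempt)
```

For example, `retry_delay(429, {"Retry-After": "7"}, 0)` yields a seven-second wait, while a 404 response yields `None` because repeating the request would not help.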

In addition, exploration of alternative APIs or other service tiers that offer higher rate limits may be considered. This may mean negotiating with the API provider for higher limits based on your specific use case or exploring other APIs that better meet your needs.

Overall, API users need to understand the concepts of rate limiting and throttling, be aware of the challenges and consequences of exceeding limits, and be able to implement optimization techniques to effectively manage their API usage and ensure smooth and reliable integration into the API ecosystem.

Error Handling and Resilience

Common Errors and Exceptions in API Integration

Working with APIs can sometimes lead to errors and exceptions. These problems can occur for a variety of reasons, including incorrect request parameters, network connectivity issues, server-side errors, or even authentication and authorization issues. It is important to have a clear understanding of the most common errors and exceptions that can occur during API integration.

Among the most common errors is 400 Bad Request, which means that the server cannot understand or process the request due to invalid syntax or missing parameters. Another common error is 401 Unauthorized, which means that the request does not contain valid authentication credentials. A 404 Not Found can also occur, which means that the requested resource does not exist on the server. These are just a few examples, and it is important to be familiar with the different error codes and their meaning in order to handle them effectively.
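A client can translate these status codes into a single error type that also records whether a retry makes sense. The sketch below is one possible mapping; the `ApiError` class and the message texts are hypothetical, and any non-2xx status is treated as an error for simplicity.

```python
class ApiError(Exception):
    def __init__(self, status_code, message, retryable):
        super().__init__(f"{status_code}: {message}")
        self.status_code = status_code
        self.retryable = retryable


def error_from_status(status_code, body=""):
    """Return an ApiError for a failed response, or None for a 2xx status."""
    if 200 <= status_code < 300:
        return None
    known = {
        400: "Bad request: check syntax and required parameters",
        401: "Unauthorized: credentials are missing or invalid",
        404: "Not found: the requested resource does not exist",
        429: "Too many requests: rate limit exceeded",
    }
    message = known.get(status_code, body or "Unexpected API error")
    # Rate-limit and server-side errors are often transient; other 4xx errors are not.
    retryable = status_code == 429 or status_code >= 500
    return ApiError(status_code, message, retryable)
```

Keeping the `retryable` flag on the error lets calling code decide between retrying and reporting the problem without re-inspecting the status code.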

Importance of Proper Error Handling and Resilience Mechanisms

It is critical to implement adequate error handling and resilience mechanisms. Error handling ensures that problems encountered during API integration are dealt with properly and that users or downstream systems are notified of the errors encountered. Resilience, on the other hand, refers to the ability of a system to recover from errors and continue to function reliably.

Proper error handling helps maintain the stability and reliability of your API integrations. It allows you to provide your customers with meaningful error messages that help troubleshoot and reduce support efforts. Effective error handling can also improve the overall user experience by providing helpful information and guidance when errors occur.

Resilience mechanisms, such as retrying failed requests, implementing circuit breakers, or applying fallback strategies, are essential to ensuring the reliability and availability of your API integrations. By anticipating and planning for potential failures, you can minimize downtime and prevent cascading failures that can have a significant impact on your systems and users.

Implementing Effective Error Handling Strategies

Implementing effective error handling strategies is critical to the stability and reliability of your integrations. The following are some important considerations for implementing such strategies:

- Thoroughly understand the API documentation: Familiarize yourself with the API documentation to understand the expected behavior, error codes, and error messages returned by the API. With this knowledge, you can handle errors appropriately and provide relevant information to users.

- Handle error responses properly: When an error occurs, make sure you analyze the error response and extract meaningful information from it. Use this information to create clear and concise error messages that can provide guidance to users or downstream systems on how to fix the problem.

- Ensure error logging and monitoring: Implement robust error logging and monitoring mechanisms to capture and track errors. This allows you to identify patterns, track recurring issues, and proactively address potential problems.

- Implement retries and exponential backoff: When transient errors occur, implement a retry mechanism with an exponential backoff strategy. This approach enables automatic retries while minimizing API impact and avoiding overload from repeated failed requests.

- Handle rate limiting and throttling gracefully: APIs often impose rate limits or implement throttling mechanisms to protect their resources. It is critical that these scenarios are handled sensibly by adhering to the prescribed limits and implementing strategies to handle errors when the rate limit is exceeded.

- Communicate effectively with API providers: Create open lines of communication with API providers. Reach out to them when errors occur and ask for guidance or support. Building a strong relationship with the API provider can lead to faster resolution of issues and better collaboration.

By implementing these effective error handling strategies, you can ensure that your API integrations remain stable and reliable and provide a seamless experience for your users and downstream systems.

Best Practices for Overcoming API Integration Challenges

Importance of Comprehensive and Up-to-Date API Documentation

Comprehensive and up-to-date API documentation plays a critical role in successful integrations. Clear and detailed documentation not only provides valuable insight into the functionality of the API, but also helps you understand how to interact with it effectively. Comprehensive documentation covers all essential aspects of the API, including endpoints, request/response formats, authentication methods, error handling, and any specific nuances or limitations.

Having up-to-date API documentation is equally important. APIs evolve over time with updates, new features, and bug fixes. Outdated documentation can lead to confusion and inefficiency, which in turn leads to integration issues. Be vigilant and ensure that the documentation you rely on is regularly maintained and reflects the current state of the API. This includes documenting any changes or deprecations to endpoints, updated request/response formats, and changes to authentication mechanisms.

Tools and Techniques for Exploring APIs

It's always worth considering new tools and techniques that can improve your workflow and help you manage integration issues more efficiently.

API exploration tools such as Postman, Insomnia, or cURL remain invaluable for testing API endpoints, making requests, and checking responses. These tools provide features such as request history, parameter management, and response visualization that allow you to explore API functions more deeply.

Also, consider using API documentation tools such as Theneo. Theneo and similar tools provide machine-readable specifications and allow you to automatically create client SDKs, server stubs and interactive API documentation. By using these tools and techniques, you can streamline your API exploration process, reduce manual errors, and improve overall integration efficiency.

Integration Testing and Monitoring

Significance of Thorough Integration Testing

Effective testing ensures that your integrations are robust, reliable, and resilient to changes in the API ecosystem. By performing comprehensive integration testing, you can identify potential issues early in the development process and mitigate risks before they impact your production environment.

To perform thorough integration testing, it's important to cover different scenarios, including positive and negative test cases. Positive tests verify that the API works correctly under normal conditions, while negative tests help uncover potential edge cases and validate error handling mechanisms. You should also test different input combinations, evaluate performance under different loads, and evaluate the API's compatibility with different environments.
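A positive and a negative test case might look like the following sketch, which uses only the standard library. It starts a small stub server on a local port to stand in for the remote API; the `/orders/<id>` endpoint and its responses are invented for the example, and a real suite would point at a sandbox or staging environment instead.

```python
import http.client
import json
import threading
import unittest
from http.server import BaseHTTPRequestHandler, HTTPServer


class StubApiHandler(BaseHTTPRequestHandler):
    """Tiny stand-in for the remote API (hypothetical /orders/<id> endpoint)."""

    def do_GET(self):
        if self.path == "/orders/42":
            status, payload = 200, {"id": 42, "status": "shipped"}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


class OrderApiIntegrationTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        cls.server = HTTPServer(("127.0.0.1", 0), StubApiHandler)  # port 0: any free port
        cls.port = cls.server.server_port
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def _get(self, path):
        conn = http.client.HTTPConnection("127.0.0.1", self.port, timeout=5)
        try:
            conn.request("GET", path)
            response = conn.getresponse()
            return response.status, json.loads(response.read())
        finally:
            conn.close()

    def test_existing_order_is_returned(self):  # positive case
        status, body = self._get("/orders/42")
        self.assertEqual(status, 200)
        self.assertEqual(body["status"], "shipped")

    def test_missing_order_returns_404(self):  # negative case
        status, body = self._get("/orders/999")
        self.assertEqual(status, 404)
        self.assertEqual(body["error"], "not found")
```

Saved in a test module, these cases can be run with `python -m unittest`, which also makes them easy to include in an automated pipeline.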

Implement Automated Testing and Monitoring for APIs

When it comes to integration testing, automation can greatly increase efficiency and accuracy. By using tools such as testing frameworks, scripting languages, and continuous integration/continuous deployment (CI/CD) pipelines, you can automate test case execution, generate test reports, and ensure consistent and repeatable testing processes.

In addition, implementing API monitoring solutions is critical to maintaining the health and performance of your integrations. With real-time monitoring tools, you can track API uptime, response times, error rates and other key metrics. Proactive monitoring alerts you to potential issues before they impact your users, so you can take timely action to avoid disruption. By integrating monitoring into your API ecosystem, you can identify performance bottlenecks, detect anomalies, and make data-driven decisions to optimize your integrations.

Monitoring API Performance and Health

Monitoring API performance and health is a fundamental aspect of maintaining successful integrations. By continuously monitoring the performance of the APIs you integrate with, you can identify and address issues such as slow response times, high error rates, or data retrieval inconsistencies.

To effectively monitor the performance and health of APIs, consider using monitoring tools that provide comprehensive metrics and insights. These tools can track key performance indicators (KPIs) such as response time, latency, throughput and error rates. They can also help you identify trends, correlate performance with external factors and set up alerts for critical thresholds. By proactively monitoring API performance, you can ensure optimal user experiences, meet service level agreements (SLAs), and identify areas in need of optimization.
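
To make these KPIs concrete, here is a small sketch that derives an error rate and a 95th-percentile latency from recorded calls and raises alerts when thresholds are crossed. The CallRecord type, the summarize function, and the default thresholds (500 ms, 5% errors) are illustrative assumptions, not values taken from any particular monitoring product.

```python
from dataclasses import dataclass


@dataclass
class CallRecord:
    latency_ms: float
    status_code: int


def summarize(records, latency_threshold_ms=500.0, max_error_rate=0.05):
    """Compute basic health metrics for a batch of API calls."""
    if not records:
        return {"count": 0, "error_rate": 0.0, "p95_latency_ms": 0.0, "alerts": []}

    latencies = sorted(r.latency_ms for r in records)
    # Nearest-rank percentile: the smallest sample with at least 95%
    # of all samples at or below it (integer math avoids float rounding).
    rank = (95 * len(latencies) + 99) // 100
    p95 = latencies[rank - 1]

    # Treat 5xx responses as server-side errors.
    errors = sum(1 for r in records if r.status_code >= 500)
    error_rate = errors / len(records)

    alerts = []
    if p95 > latency_threshold_ms:
        alerts.append("p95 latency above threshold")
    if error_rate > max_error_rate:
        alerts.append("error rate above threshold")

    return {
        "count": len(records),
        "error_rate": error_rate,
        "p95_latency_ms": p95,
        "alerts": alerts,
    }
```

In practice a monitoring tool would compute these figures over sliding time windows; the sketch only shows how the numbers relate to each other.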

Prioritizing comprehensive and up-to-date API documentation, effectively exploring APIs, conducting thorough integration testing, and implementing automated testing and monitoring can help you overcome challenges with confidence. Monitoring API performance and health ensures the continued success of your integrations and enables you to deliver reliable, high-performing solutions to your users.

Implementing Middleware and Integration Layers

Middleware and integration layers play a critical role in the successful implementation of APIs. These layers act as intermediaries between different components, systems, or services, facilitating seamless communication and data exchange. Middleware acts as a bridge, providing a standardized interface and enabling interoperability between different systems. Integration layers, on the other hand, focus on integrating different systems, ensuring data consistency and streamlining business processes. By leveraging middleware and integration layers, enterprises can achieve greater flexibility, scalability, and efficiency in API integration.

Benefits of Using Middleware for API Integration

Using middleware for API integration brings several benefits to organizations that work extensively with APIs. First, middleware reduces the complexity of integration by abstracting the underlying technical details and allowing developers to focus on the core functionality of their applications. It also promotes code reusability and modularity because middleware components can be shared across different APIs and projects.

In addition, middleware provides a central point of control and management for API integrations and enables efficient monitoring, logging and error handling. It facilitates enforcement of security policies, data transformations, and protocol translations, and ensures seamless interoperability between systems with different architectures and technologies.

Middleware solutions also often provide advanced features such as message queuing, event-driven architecture, and caching mechanisms that can improve the performance, reliability, and scalability of API integrations. These benefits collectively contribute to accelerated development cycles, reduced maintenance requirements, and improved overall system stability.
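
The idea of a central point for logging and security enforcement can be sketched as a chain of wrappers around a request handler. Everything here is hypothetical and framework-independent: the dictionary-based request and response shapes, the get_orders handler, and the token value are assumptions made purely for illustration.

```python
import time


def logging_middleware(log):
    """Record path, status, and duration for every request."""
    def wrap(handler):
        def wrapped(request):
            start = time.perf_counter()
            response = handler(request)
            elapsed_ms = (time.perf_counter() - start) * 1000
            log.append((request["path"], response["status"], elapsed_ms))
            return response
        return wrapped
    return wrap


def auth_middleware(valid_tokens):
    """Reject requests that do not carry a known token."""
    def wrap(handler):
        def wrapped(request):
            if request.get("token") not in valid_tokens:
                return {"status": 401, "body": "unauthorized"}
            return handler(request)
        return wrapped
    return wrap


def build_pipeline(handler, middlewares):
    # Wrap in reverse so the first middleware in the list runs outermost.
    for middleware in reversed(middlewares):
        handler = middleware(handler)
    return handler


def get_orders(request):
    # Core business logic stays free of logging and security concerns.
    return {"status": 200, "body": ["order-1", "order-2"]}


log = []
app = build_pipeline(
    get_orders,
    [logging_middleware(log), auth_middleware({"secret-token"})],
)
```

Because logging sits outermost, rejected requests are logged as well, and the same two middleware functions could be reused around any other handler.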

Popular Middleware Solutions and Frameworks

There are several popular middleware solutions and frameworks that can help in creating robust and scalable integrations. Some of the notable options are:

- Apache Kafka: Kafka is a distributed streaming platform that excels at processing data streams in real time with high throughput and fault tolerance. It offers a scalable publish/subscribe architecture, making it suitable for building event-driven systems and implementing reliable API integrations.

- MuleSoft Anypoint Platform: The Anypoint Platform provides a comprehensive set of middleware tools and services for API design, integration, management and analysis. It provides a unified platform for building, deploying, and monitoring APIs and enables seamless connectivity between different applications, services, and data sources.

- Spring Integration: Spring Integration is based on the popular Spring Framework and offers a lightweight yet powerful integration solution. It provides a set of components, patterns, and connectors for building message-driven systems, performing data transformations, and orchestrating complex workflows in API integrations.

- Microsoft Azure Logic Apps: Logic Apps is a cloud-based integration service offered by Microsoft Azure. It provides a visual designer and a wide range of connectors to create workflows and orchestrations that connect APIs, SaaS applications and on-premises systems. Logic Apps provides robust monitoring, debugging and error handling capabilities.

- IBM Integration Bus: IBM Integration Bus (formerly known as IBM WebSphere Message Broker, and since succeeded by IBM App Connect Enterprise) is an enterprise integration solution designed to connect applications, services, and data across different platforms and protocols. It provides a wide range of connectors, transformation capabilities, and message routing features to enable seamless API integration in complex environments.

These middleware solutions and frameworks are just a few examples, and there are many more on the market. The choice of middleware depends on the specific project requirements, existing technology stack, scaling requirements and integration complexity. API developers should carefully review the features, capabilities, and community support of the various middleware options before selecting the one best suited for their API integration needs.

Implementing Retry and Circuit Breaker Patterns

Understanding Retry and Circuit Breaker Patterns

Retry and circuit breaker patterns are essential techniques for building resilient API integrations, especially in the face of transient failures and network problems. In the retry pattern, a failed operation or request is attempted multiple times, with the goal of eventually succeeding or falling back to a reliable alternative. The circuit breaker pattern, on the other hand, helps prevent cascading failures by tracking repeated failures and temporarily "breaking" the circuit to block further requests until the system stabilizes.

Handling Transient Failures and Network Issues

API integrations often experience transient failures and network issues that can disrupt expected operations. Transient failures are temporary issues that prevent successful communication, such as network timeouts, temporary unavailability of services, or server errors. Implementing a retry mechanism allows the integration layer to automatically retry failed operations after a short delay or with an incremental or exponential backoff strategy. Retrying requests increases the chances of success when transient problems resolve themselves.
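
A retry mechanism of this kind can be sketched in a few lines. This version assumes exponential backoff with random jitter, which is one common choice rather than the only one. The TransientError class and the retry function are hypothetical names, and the default delays are illustrative.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, 503s)."""


def retry(operation, max_attempts=4, base_delay=0.5, max_delay=8.0,
          sleep=time.sleep):
    """Call operation(), retrying on TransientError with backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Exponential backoff: 0.5s, 1s, 2s, ... capped at max_delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(random.uniform(0, delay))
```

Only errors classified as transient are retried; anything else propagates immediately, which avoids repeating requests that can never succeed. The sleep parameter exists so tests can run without real waiting.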

Network issues such as high latency, packet loss, or network congestion can also affect API integrations. To mitigate the impact of these issues, implementing the circuit breaker pattern is beneficial. The circuit breaker monitors the success and failure rates of API calls and trips when the failure rate exceeds a predefined threshold. While the circuit is open, subsequent requests are immediately rejected to prevent error propagation. After a certain period of time, the circuit enters a half-open state that allows a limited number of requests to test the stability of the system. If these requests are successful, the circuit is closed again; otherwise it is reopened.
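
The state transitions described above can be sketched as a small class. This is a simplification under stated assumptions: it counts consecutive failures instead of computing a failure rate, it lets a single trial request through in the half-open state, and it is not thread-safe. The CircuitBreaker and CircuitOpenError names and the default values are hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, operation):
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit is open; request rejected")
            self.state = "half-open"  # timeout elapsed: allow a trial call
        try:
            result = operation()
        except Exception:
            self._on_failure()
            raise
        self._on_success()
        return result

    def _on_failure(self):
        self.failures += 1
        # A failed trial call, or too many failures in a row, opens the circuit.
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()

    def _on_success(self):
        self.state = "closed"
        self.failures = 0
```

A caller would wrap each outbound request, for example breaker.call(lambda: client.fetch()), where client.fetch is a placeholder for your own API call, and treat CircuitOpenError as a signal to serve a fallback.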

Applying Retry and Circuit Breaker Patterns in API Integration

To effectively apply retry and circuit breaker patterns, API developers can use various libraries and frameworks that provide built-in support for these patterns. For example, libraries such as Polly for .NET or Resilience4j for Java provide comprehensive support for implementing retry and circuit breaker policies with configurable options.

When implementing retries, factors such as the maximum number of retries, backoff strategies, and handling exceptions and error codes must be considered. When implementing circuit breakers, appropriate thresholds, timeout periods, and strategies for transitioning between open, closed, and half-open states must also be defined.

To monitor the effectiveness of retry and circuit breaker patterns, it is advisable to incorporate appropriate logging and monitoring mechanisms. This helps identify error patterns, optimize configuration, and gain valuable insights into the stability and performance of API integrations.

By incorporating retry and circuit breaker patterns into API integrations, developers can significantly improve the resilience and reliability of their systems. These patterns mitigate the impact of transient failures, network issues, and system congestion, ensuring smooth operations and an improved end-user experience.

Caching and Data Synchronization

Utilizing Caching Mechanisms to Improve API Performance

Caching plays an important role in improving API performance. Caching involves storing data that is frequently accessed in a temporary storage layer so that the data does not need to be repeatedly retrieved from the original source. By implementing an effective caching mechanism, you can significantly shorten response time and reduce the load on your API servers.

To take advantage of caching, it is important to identify the right data elements for caching. This typically includes static or infrequently changing data that is shared across multiple API requests. Examples include reference data, configuration settings, or frequently used database queries. By storing such data in a cache, subsequent requests can be served directly from the cache without the need to perform expensive operations or query the underlying data source.

When implementing caching, factors such as cache expiration policies, eviction strategies, and cache invalidation mechanisms must be considered. Time- or event-based expiration policies ensure that cached data stays fresh, while eviction strategies help control memory usage by removing less frequently accessed items. In addition, cache invalidation techniques based on cache tags or cache keys allow you to update or remove specific cache entries as the underlying data changes.
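
The three concerns above (expiration, eviction, invalidation) fit into one small in-process cache. This sketch assumes time-based expiration and least-recently-used eviction; the TtlLruCache name and its defaults are hypothetical, and a production system would more likely rely on an existing cache server or library.

```python
from collections import OrderedDict
import time


class TtlLruCache:
    def __init__(self, max_entries=128, ttl_seconds=60.0, clock=time.monotonic):
        self.max_entries = max_entries
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = OrderedDict()  # key -> (expires_at, value)

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # time-based expiration
            return default
        self._entries.move_to_end(key)  # mark as most recently used
        return value

    def set(self, key, value):
        self._entries[key] = (self.clock() + self.ttl_seconds, value)
        self._entries.move_to_end(key)
        if len(self._entries) > self.max_entries:
            self._entries.popitem(last=False)  # evict least recently used

    def invalidate(self, key):
        # Key-based invalidation: call this when the source data changes.
        self._entries.pop(key, None)
```

A typical read path checks cache.get(key) first and only queries the underlying data source on a miss, storing the result with cache.set(key, value).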

Implementing Data Synchronization Strategies for Distributed Systems

In distributed systems involving multiple API servers or microservices, ensuring data consistency and synchronization becomes a critical factor. Implementing effective data synchronization strategies helps harmonize data across these components and maintain a coherent view of the system.

A common approach is to use distributed consensus protocols such as the Raft or Paxos algorithms. These protocols allow multiple nodes to agree on the state of shared data by coordinating their actions over a series of communication rounds. They provide fault tolerance and ensure consistency even in the face of failures or network interruptions. However, it's important to evaluate the tradeoffs, as consensus protocols can introduce additional complexity and latency into the system.

Alternatively, an event-driven architecture can facilitate data synchronization in distributed systems. Events represent state changes or important actions within the system and can be forwarded to a message broker or event bus. Subscribed components can respond to these events and update their local data accordingly. This approach provides loose coupling between components and allows for eventual consistency, but requires careful design and consideration of event sequencing, delivery guarantees, and potential event loss scenarios.
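
The event-driven approach can be illustrated with an in-process stand-in for a message broker. The EventBus and ReplicaStore classes, the topic name, and the event fields are hypothetical. Per-key version numbers are one assumed way to cope with duplicate or out-of-order delivery, not a requirement of any specific broker.

```python
from collections import defaultdict


class EventBus:
    """In-process stand-in for a message broker or event bus."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        for handler in self._subscribers[topic]:
            handler(event)


class ReplicaStore:
    """Local copy of shared data, kept in sync by applying events."""

    def __init__(self):
        self.records = {}  # key -> (version, value)

    def apply(self, event):
        current_version = self.records.get(event["key"], (0, None))[0]
        # Skip duplicates and stale events so replays are harmless.
        if event["version"] > current_version:
            self.records[event["key"]] = (event["version"], event["value"])


bus = EventBus()
orders_service = ReplicaStore()
billing_service = ReplicaStore()
bus.subscribe("order.updated", orders_service.apply)
bus.subscribe("order.updated", billing_service.apply)

bus.publish("order.updated", {"key": "order-1", "version": 1, "value": "created"})
bus.publish("order.updated", {"key": "order-1", "version": 2, "value": "shipped"})
# A late duplicate of version 1 arrives and is ignored by both replicas.
bus.publish("order.updated", {"key": "order-1", "version": 1, "value": "created"})
```

Because each replica applies events idempotently, both services converge on the same state even when the broker redelivers a message.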

Choosing Appropriate Caching and Synchronization Approaches

When it comes to choosing caching and synchronization approaches for your APIs, there is no one-size-fits-all solution. The optimal choice depends on a number of factors, including the nature of your data, expected usage patterns, and the performance requirements of your API.

For caching, consider a combination of in-memory caches, distributed caches, and content delivery networks (CDNs). In-memory caches, such as Redis or Memcached, are well suited to low-latency access to frequently requested data. Distributed caches, such as Hazelcast or Apache Ignite, provide scalability and fault tolerance across multiple nodes. CDNs are beneficial for caching static content or serving geographically dispersed users.

As for synchronization, you need to evaluate the requirements of your distributed system. If strong consistency is paramount, consensus protocols such as Raft or Paxos may be a good choice. On the other hand, if eventual consistency is enough, event-driven architectures with message brokers such as Apache Kafka or RabbitMQ offer flexibility and scalability.

Ultimately, the choice of caching and synchronization approaches should be based on your specific API use cases and performance goals. Consider performing performance testing and analysis to evaluate the impact of different strategies on your system's responsiveness, scalability, and data integrity.